Acting under uncertainty

A general approach to respond to uncertainty

In the previous post, I introduced the concept of uncertainty as the main unifying driver of rethinking IT. I described how it breaks the certainty-based value prediction model on which most of our actions (in IT and beyond) are based, and why so many people and companies have difficulty accepting and adapting to uncertainty.

In this post, I will continue by discussing the basic approach to acting under uncertainty.

Projects from a financial perspective

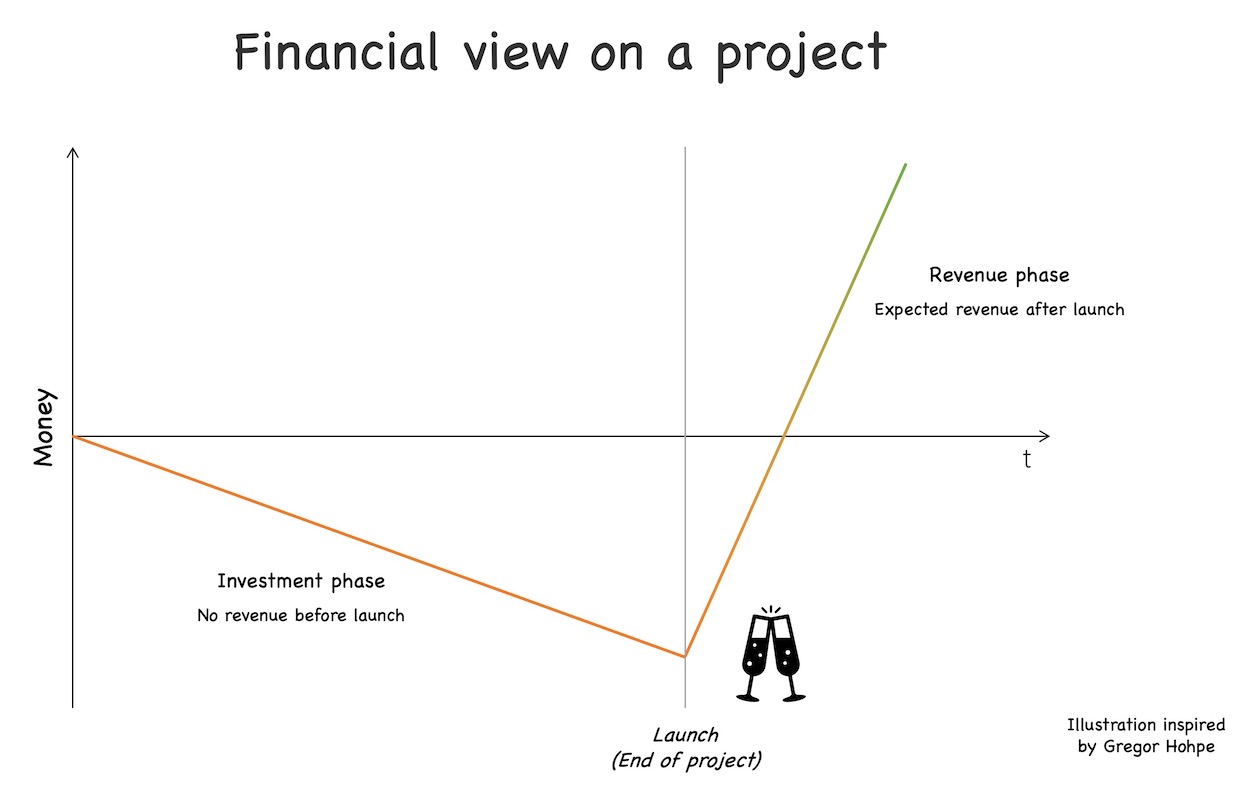

To understand the basic approach better, it makes sense to look at (traditional) projects from a financial perspective.

A project that launches its subject at the end of the project is a pure investment while the project is running. It just costs money. The subject of the project is meant to create value after launch – usually making money – but it is not yet available. At the end of the project the subject – in the case of an IT project usually some new, extended or modified IT solution – eventually gets launched.

After the launch, the solution is expected to make enough money to pay off its costs. This expectation is typically written down in the business plan, which usually must be prepared before a project can be approved. The business plan usually contains figures like the “break even point” (the point in time when the accumulated profits offset the preceding investments) or the “return on investment (ROI)” (the ratio between expected profits and investments). Projects typically only get approved if these (or comparable) financial figures are good enough.
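To make these two figures concrete, here is a minimal Python sketch. It assumes that a business plan can be expressed as a list of net cash flows per period (negative while the project runs, positive after launch); the function names `break_even_period` and `roi` and the example numbers are hypothetical, and ROI is computed with one common convention, net return divided by investment.

```python
from __future__ import annotations

from itertools import accumulate
from typing import Sequence


def break_even_period(cash_flows: Sequence[float]) -> int | None:
    """Index of the first period in which the accumulated cash flow,
    after having been negative, reaches zero or more; None if it never does."""
    was_negative = False
    for period, total in enumerate(accumulate(cash_flows)):
        if total < 0:
            was_negative = True
        elif was_negative:
            return period
    return None


def roi(cash_flows: Sequence[float]) -> float:
    """Net return divided by investment (one common ROI convention)."""
    investment = -sum(cf for cf in cash_flows if cf < 0)
    if investment == 0:
        raise ValueError("plan contains no investment")
    returns = sum(cf for cf in cash_flows if cf > 0)
    return (returns - investment) / investment


# Hypothetical plan: 4 quarters of project costs, then 8 quarters of profit.
plan = [-100.0] * 4 + [80.0] * 8
print(break_even_period(plan))  # 8
print(roi(plan))                # 0.6
```

Note that every number on the positive side of such a list is a prediction made before the project starts, which is exactly the part that uncertainty calls into question.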

It is important to note that the business plan (or whatever tool is used to assess the expected economic success of a project) is written before the project starts. Sometimes it contains best case/worst case estimates, which all tend to be positive because otherwise the project would not get approved. But usually that is it. In particular, it does not plan for any feedback loops to understand the market response to the project results.

This kind of value assessment presupposes a certainty-based value prediction model because otherwise it would be impossible to reliably predict the expected revenue and profits.

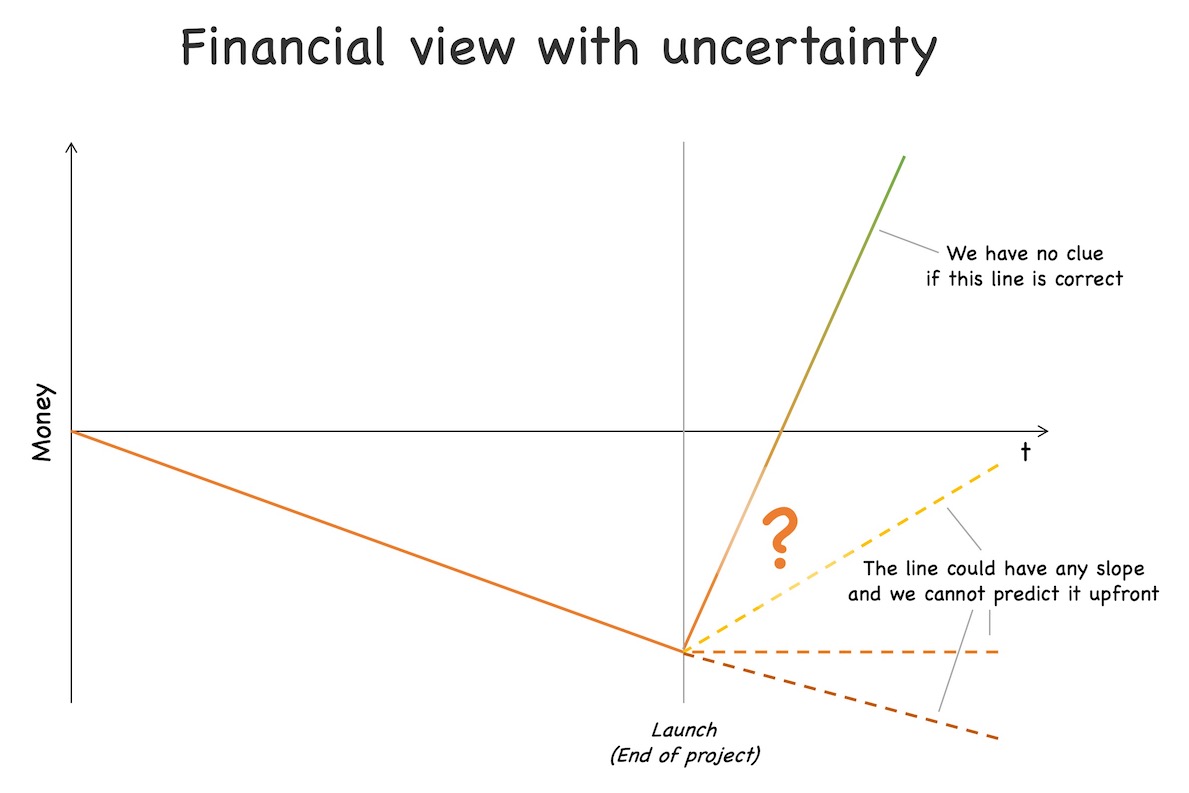

Adding uncertainty

If we add uncertainty to the financial project perspective, we get a different picture.

Our business case assumes a certain amount of revenue and profits over time after the launch of the project subject. Yet, under uncertainty we have no idea how revenue and profits will develop because we cannot predict how the customers will respond to the project subject. We can only measure it after the fact, i.e., we need to release the project subject, and only then can we observe how the customers respond to it.

The response could match the expected curve[1]. But it could also be any other curve. It could even be a negative curve, i.e., we destroy value and lose money because the customers dislike what we did. The key point is: Under uncertainty we cannot reliably predict the evolution of the curve upfront.

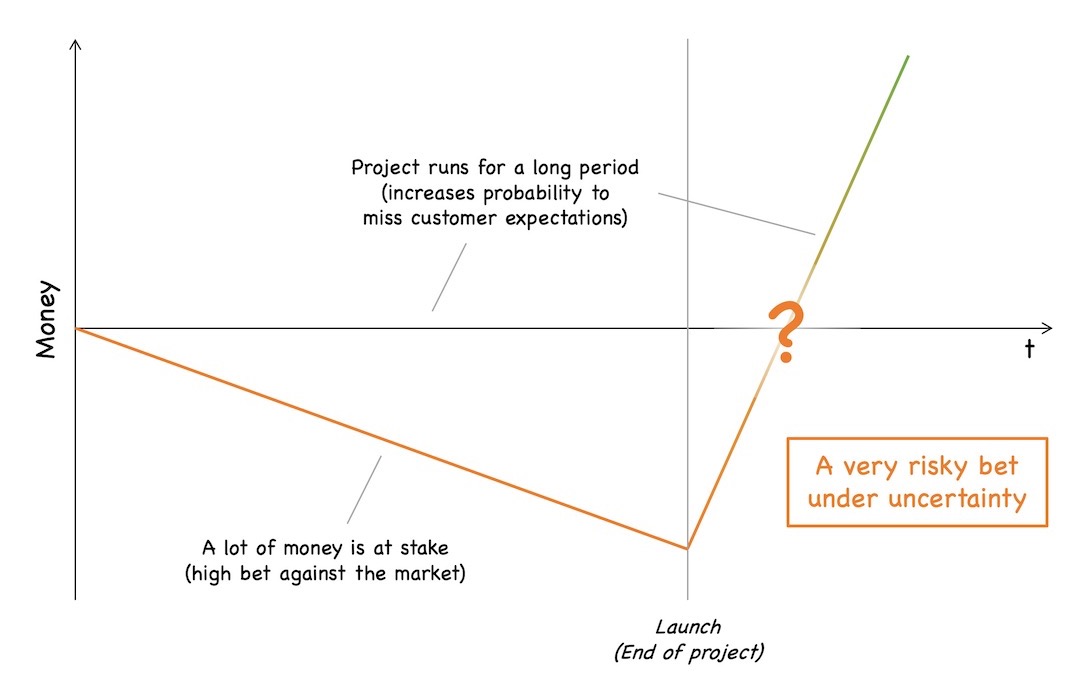

Investments as bets against the market

With that knowledge about the effect of uncertainty, let us revisit the original financial view of such an all-at-once-delivery project.

As we have no idea upfront about the outcome of our project investment, we can consider the investment a bet against the market. We hope that the investment will return more profit than it costs, but we only know for sure after the market, i.e., the customers, has decided. Sometimes we will win our bet, sometimes we will lose it.

The typically long runtime of a project until launch[2] makes the project bet even riskier. The longer the time between an idea and its release to the market, the higher the probability that market needs and expectations will have changed in the meantime, especially in the highly dynamic post-industrial markets that have become the norm today.

This observation is not only true for whole projects but can be broken down to every single feature that is delivered to the market:

Uncertainty means that every new feature you deliver is a bet against the market.

Thinking in hypotheses

So, any project that only launches its subject at the end of the project is a huge risk. From a risk management perspective, this immediately raises the question of whether we can reduce this risk.

While many companies have not yet found an answer to this question, we find the right idea in common parlance: Do not put all your eggs in one basket. Translated to our context: Do not make a single, big bet. Make many small bets instead.

Yet, splitting up the project investment is not sufficient. It is just a prerequisite. If you split up the project into many small investments, you gain the possibility to learn from the results of each investment and to use what you learned to steer future investments.

The best way to ensure learning is to build the certainty of uncertainty (pun intended!) right into how we perceive the contents of our investments: we consider each idea that results in an investment a hypothesis.

If you stop thinking in requirements and start thinking in hypotheses, it guides you in the right direction. Requirements tend to be considered certain, i.e., to add value, it is considered sufficient to capture a requirement accurately enough and implement it correctly. The idea that the users might reject a requirement, i.e., that it does not create value or might even destroy value, usually does not come to mind.

A hypothesis, on the other hand, explicitly includes the option that it may fail, i.e., that the users may falsify the underlying assumption. Thus, if we consider all our ideas hypotheses, we will start acting very differently.

We will try to figure out the smallest activity that lets us learn from market feedback whether the idea works or not. If the feedback is positive, we take the idea a bit further and measure the feedback again. If the feedback is negative, we try something different. This leads to a stepwise approach towards a successful solution.

Under uncertainty stop thinking in requirements and start thinking in hypotheses.

You can write down the basic approach of hypothesis-driven development as an algorithm (omitting the stop conditions for the sake of simplicity):

1. Create a hypothesis regarding the effect of an effort.
2. Do the smallest action suitable to measure an effect.
3. Measure the effect and evaluate the hypothesis.
4. Further develop the hypothesis if expectations were met.
5. Drop or pivot the hypothesis otherwise.
6. Go back to step 1 (drop or pivot) or step 2 (further develop).
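The steps above can be sketched as a loop. This is a minimal Python sketch, not a prescribed implementation, and all names in it are hypothetical: it assumes ideas come from a backlog iterable, that a caller-supplied `run_experiment` function performs the smallest action, measures its effect and reports whether expectations were met, and it adds a fixed experiment `budget` as a stand-in for the omitted stop conditions. A pivot is modeled as putting a modified idea into the backlog.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Hypothesis:
    statement: str
    steps_validated: int = 0  # how many measured steps met expectations


def explore(ideas: Iterable[str],
            run_experiment: Callable[[Hypothesis], bool],
            budget: int) -> list[Hypothesis]:
    """Run at most `budget` experiments (an assumed stop condition) and
    return every hypothesis that was tried, in order."""
    backlog = iter(ideas)
    tried: list[Hypothesis] = []
    current: Hypothesis | None = None
    for _ in range(budget):
        if current is None:
            # Step 1: create a hypothesis (here: take the next idea).
            statement = next(backlog, None)
            if statement is None:
                break  # backlog exhausted
            current = Hypothesis(statement)
            tried.append(current)
        # Steps 2 and 3: smallest action, measure the effect, evaluate.
        if run_experiment(current):
            # Step 4: develop further, then back to step 2.
            current.steps_validated += 1
        else:
            # Step 5: drop it (a pivot would be a new idea in the backlog),
            # then back to step 1.
            current = None
    return tried


# Hypothetical experiment: users reject idea "A" and like idea "B".
result = explore(["A", "B"], lambda h: h.statement == "B", budget=4)
print([(h.statement, h.steps_validated) for h in result])
# [('A', 0), ('B', 3)]
```

The budget is the simplest stop condition one could pick; in practice, deciding when to stop or pivot is much fuzzier than a counter.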

Basically, by treating ideas as hypotheses, we explore them in a kind of PDCA (Plan-Do-Check-Act) approach, i.e., we continuously adapt our course of action based on what we learned from prior experiments[3].

This way, we can identify idle and value-reducing performances, i.e., ideas that customers do not care about or even dislike, early and stop investing in them. Instead, we can use the budget freed up to reinforce ideas that create positive market response. In other words:

Under uncertainty you do not maximize value by optimizing efficiency of efforts (a.k.a. cost efficiency), but by detecting and cutting idle and value-reducing performances as soon as possible.

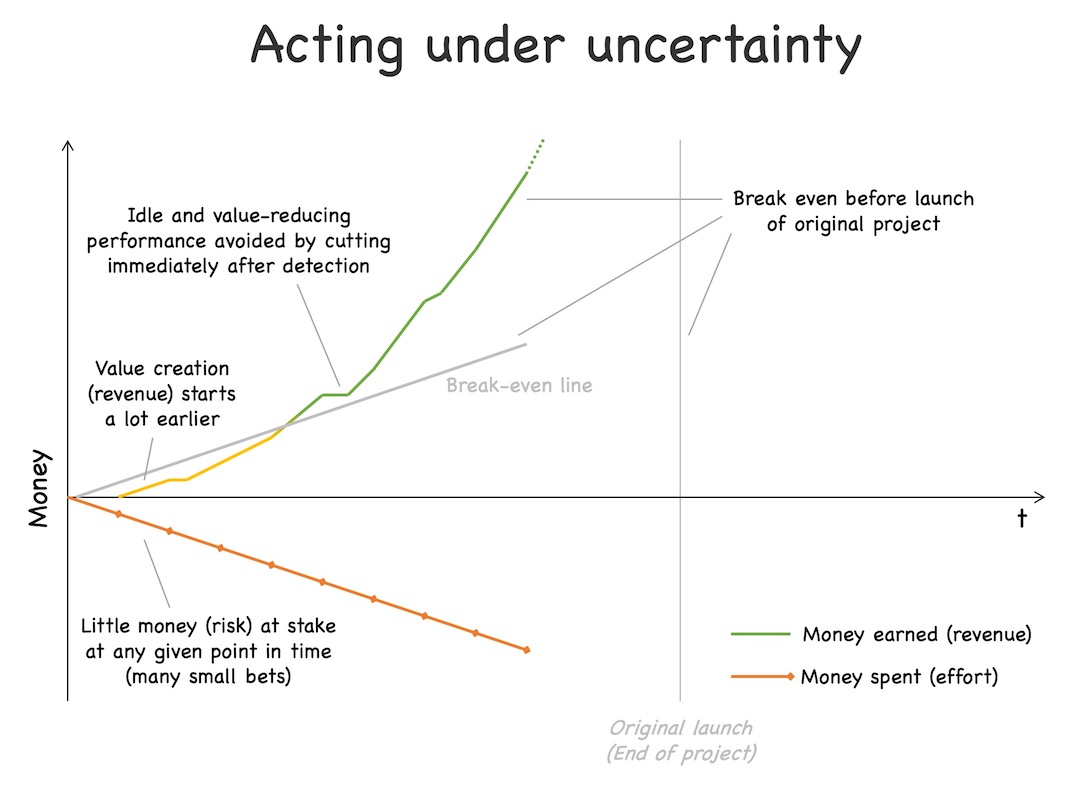

The financial perspective revisited

If we use this hypothesis-driven approach and apply it to the original financial perspective on a project, we end up with a very different picture.

Instead of implementing the subject of the project as a whole before launching it, we split it into hypotheses, which in turn we split into a series of questions to the users. The validation of each hypothesis starts with a small question requiring little effort to implement, followed by more questions, each requiring a relatively small implementation effort, until the whole hypothesis is either validated (all expectations regarding the questions met) or falsified (some expectations not met).

Usually, we do not design the whole series of questions for a hypothesis upfront, but start by designing the first one or two questions that we need to learn whether the hypothesis has any value at all.

Remember that it does not make sense to design all questions upfront because we validate a hypothesis under uncertainty. This means the chances that the users falsify our hypothesis are significant, which in turn means that designing all questions upfront will, on average, result in a lot of wasted effort because quite often you will discard the hypothesis after the first questions. We also try to design the questions so that implementing the first ones in particular takes very little effort, to lose as little money as possible if the hypothesis fails.
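A small worked calculation illustrates why designing all questions upfront wastes effort on average. It rests on a simplifying assumption of our own, not on anything measured: each question independently meets its expectations with the same probability `p_pass`, and a question is only asked if all earlier questions passed. The expected number of questions actually asked is then the sum of `p_pass ** k` for `k` from 0 to n-1, and every question designed upfront beyond that number is wasted design effort. The numbers in the example are hypothetical.

```python
def expected_questions_asked(n_questions: int, p_pass: float) -> float:
    """Expected number of questions asked: question k+1 is asked with
    probability p_pass**k (all k earlier questions passed), assuming
    independent outcomes with the same pass probability."""
    return sum(p_pass ** k for k in range(n_questions))


def expected_wasted_designs(n_questions: int, p_pass: float) -> float:
    """Questions designed upfront that, on average, are never asked."""
    return n_questions - expected_questions_asked(n_questions, p_pass)


# Hypothetical numbers: 5 questions, each passing with probability 0.5.
print(expected_questions_asked(5, 0.5))  # 1.9375
print(expected_wasted_designs(5, 0.5))   # 3.0625
```

With these assumed numbers, more than three of the five questions designed upfront would never be used on average, whereas designing only the first two limits the waste to at most one question.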

Once the hypotheses and initial questions are designed, we start implementing the initial question for a hypothesis: everything that is needed to get a response from the users that we can learn from.

Then we immediately release what we have implemented so far and observe the response of the users[4]. If the response is positive, i.e., the measured values of the metrics used exceed the target values that we defined together with the question, we move on to the next question. Otherwise, we rethink our hypothesis as described before.[5]
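The decision rule in this paragraph, moving on only if the measured metrics exceed their target values, can be sketched in a few lines. This is a minimal Python sketch under assumptions: metrics are plain numbers keyed by name, a metric that was not measured counts as not met, and the `Question` type and the metric name `click_rate` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Question:
    text: str
    targets: dict[str, float]  # metric name -> target value to exceed


def response_is_positive(question: Question, measured: dict[str, float]) -> bool:
    """True if every targeted metric was measured and exceeds its target."""
    return all(metric in measured and measured[metric] > target
               for metric, target in question.targets.items())


def next_step(question: Question, measured: dict[str, float]) -> str:
    if response_is_positive(question, measured):
        return "move on to the next question"
    return "rethink the hypothesis"


# Hypothetical question with a single target metric.
q = Question("Do users click the new button?", {"click_rate": 0.05})
print(next_step(q, {"click_rate": 0.06}))  # move on to the next question
print(next_step(q, {"click_rate": 0.04}))  # rethink the hypothesis
```

The sketch deliberately returns "rethink" rather than "drop": a negative answer can also stem from an unsuitable question, an improper implementation or bad timing.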

We do the same with all the other hypotheses. Usually, we work on several hypothesis streams in parallel because collecting feedback from the market takes some time, which we can use to implement questions of other hypotheses.

This approach has several effects on the financial perspective that are very different from the original project perspective:

- By splitting up our implementation into many small chunks, each suitable to answer a small question, and by releasing the system every time we have implemented such a chunk, we split up the single big project investment into a long series of small investments. In other words: We make many small bets against the market instead of a single big one.

- We learn very early whether a hypothesis is viable or not, long before we have implemented everything the whole hypothesis requires. This way, we can identify and cut idle and value-reducing performances early and invest the saved money in successful hypotheses.

- We can test a lot more hypotheses with the same budget than we could with the certainty-based project approach. We can use the money freed up from failed hypotheses to test new ones that were not covered by the original plan and budget. And by continuously learning from market feedback, we can dynamically adjust the project contents, only keeping and reinforcing successful ideas.

- We start making money with the project subject a lot earlier than with the original all-at-once-delivery project approach. Revenue will probably not start to flow immediately after releasing the first chunk, but still quite early in the course of the project. As we only reinforce the value-adding performances and strictly cut the other ones, chances are that the users will like the emerging solution a lot and we start to make good money early. This way, we not only have the option to amortize our investments before the launch date of the original all-at-once project, but can even start to create profits before the project ends.

Additionally, we reduce the project risk drastically compared to the original all-at-once-delivery project approach. To come back to the common parlance, we do not put all our eggs in one basket anymore. To stretch the metaphor a bit further, we also test the strength of the baskets every time before we put another egg into one of them.

We actively manage the risk introduced by uncertainty by splitting up our investments into many small chunks and continuously collecting feedback from the market regarding the value of our performances. The key element is that we accept uncertainty and respond to it in a sensible way.

Summing up

In this post, we looked at projects from a financial perspective and used it to derive a basic approach to responding to uncertainty. Traditional all-at-once-delivery project approaches are based on the assumption that the project subject will make a profit after launch for sure. The underlying assumption is a certainty-based value prediction model.

Under uncertainty, this assumption no longer holds. The revenue curve after launch could have any slope. It could even be negative, and it is not possible to predict the slope upfront. Additionally, the typically long runtime of a project increases the likelihood that the requirements captured upfront will no longer meet the customer expectations after launch, especially in highly dynamic post-industrial markets.

Uncertainty means that our investments and efforts are basically bets against the market. And a big all-at-once-delivery project means a single big and risky bet with just a small chance to win it in the anticipated way.

To address this risk, it makes sense to work with smaller investments and continuously check whether we are still on the right track. A good mental tool for this approach is to think in hypotheses because, in contrast to requirements, they explicitly include the possibility of being wrong. This mental hack leads to a very different approach to implementing new ideas.

Instead of implementing an idea (captured as a hypothesis) all at once, we split it into a series of questions to the market, each to be implemented with relatively small effort. After each implementation chunk, the solution is released to the market and the feedback of the users is collected and evaluated. Only if the feedback is positive is the hypothesis pursued, i.e., the next question gets implemented. Otherwise, the hypothesis will be reconsidered or even dropped.

By continuously posing small questions to the market, observing the responses and learning from them, we can identify and cut off idle and value-reducing performances early and make sure that we only reinforce ideas that meet the customers’ expectations, i.e., will make money.

This is the basic approach to acting under uncertainty: small steps, continuously collecting feedback from the market and actively responding to it.

There are tons of details, variants and pitfalls that I did not mention, but I will leave it here for the moment and come back to them in some later posts.

In the next post, I will discuss that not everything is equally affected by uncertainty and how we need to respond differently to different levels of certainty and uncertainty. Stay tuned …

[1] Side note: The expected revenue and profit curves in most business cases tend to be quite optimistic because the authors of business cases are usually interested in getting their projects approved. As a result, in quite a few companies writing business cases has degenerated into finding the most positive prediction you can get away with, i.e., one that does not make the decision makers suspicious, because that maximizes the chances of getting your project approved.

[2] Most so-called “agile” projects also have a long runtime. The relevant time period is the time from an idea (a “feature”) until the customer sees the result and can provide feedback, called “lead time” or “cycle time” depending on the terminology you prefer. Even though most “agile” projects use short iterations, like Scrum “sprints” of 1 or 2 weeks, the subject of the project is usually only released to the customers at the end of the project and not after each iteration. Thus, the lead/cycle time remains the same, namely the whole project duration.

[3] Many people shy away from the term “experiment” because it does not sound like a serious approach. But “experiment” refers to the idea of the scientific experiment, which is a very serious and highly successful approach under uncertainty. In a scientific experiment, you set up a hypothesis that is considered true until falsified. Everything we consider true in science today is basically a hypothesis that has not been falsified. The advantage in our context is that we learn not only from failure, but also from success.

[4] Being able to watch the response of the users means that you need to implement business metrics. Ideally, you implement them on top of your infrastructure and application monitoring so that you can correlate all the different metric types. Otherwise, if you observe a deviation from the expected business metrics, you often cannot tell whether it is due to a business or an IT problem. Implementing good monitoring and observability is a huge topic. I will probably dive deeper into it in future posts.

[5] Actually, one of the hardest things in hypothesis validation is knowing when to pivot or drop a hypothesis, because a negative answer to a question does not automatically mean that the related hypothesis is wrong. It could also be due to an unsuitable question, an improper implementation, bad timing, or something else you have not considered. This is a big difference between customer-driven markets and scientific experiments. Hypotheses against the market are a lot fuzzier in the sense that proving or falsifying them is not an exact science but always a mixture of hard numbers, intuition and luck. Every startup product manager can tell you endless stories about this challenge.
