The long way towards resilience - Part 5

The plateau of robustness

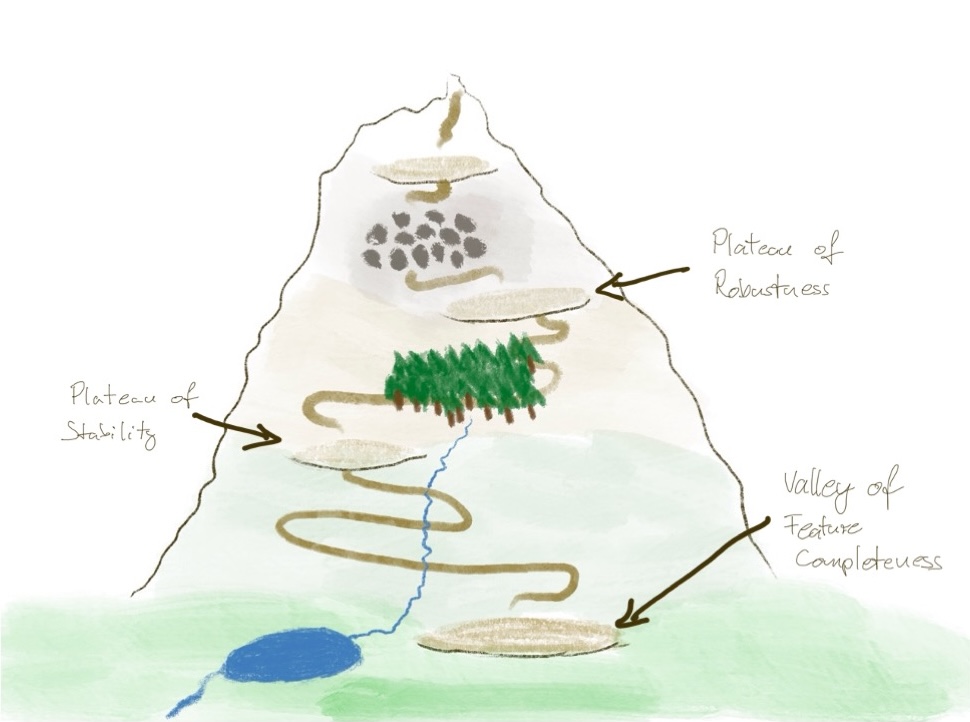

In the previous post, we discussed the 100% availability trap and revisited availability. We also discussed the two realizations needed to continue our journey to the second plateau.

In this post, we will discuss what we find at the second plateau, the plateau of robustness.

The plateau of robustness

The plateau of robustness[1] is the second interim stop that companies tend to reach on their journey towards resilience.

Not many companies can be found there because reaching it requires a change of mindset, which is typically hard and takes time. The required change is to accept that failures cannot be avoided:

We cannot avoid failures. Therefore, let us embrace them.

This does not mean that failure avoidance becomes irrelevant. In terms of availability, it is still better to experience a failure once per week than once per hour. However, we no longer rely solely on failure avoidance, i.e., we do not assume we can push MTTF (Mean Time To Failure) so far that we experience a failure only once per century.

Embracing failures means accepting that our ability to avoid failures is limited. We can reduce their frequency, but we cannot avoid them entirely. Therefore, we additionally need to focus on handling failures: recovering from them quickly or at least mitigating them.

Evaluation of the plateau of robustness

With that said, let us explore the plateau of robustness in more detail using the evaluation schema we already know.

Core driver

The core driver is to embrace failure.

The train of thought is: we want to maximize availability. We cannot avoid failures completely. Therefore, let us put part of our focus on increasing MTTF, while accepting that we cannot increase it arbitrarily. More importantly, let us find ways to reduce MTTR (Mean Time To Recovery), i.e., to quickly detect and recover from failures or at least mitigate them, continuing operations at a defined reduced service level.[2]
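To make this train of thought tangible: a common steady-state approximation for availability is A = MTTF / (MTTF + MTTR). The minimal Python sketch below compares a hypothetical baseline (one failure per week, one hour to recover) with doubling MTTF and with cutting MTTR to five minutes. All numbers are illustrative assumptions, not measurements.

```python
def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability approximation: A = MTTF / (MTTF + MTTR)."""
    return mttf_hours / (mttf_hours + mttr_hours)


# Hypothetical baseline: one failure per week (168 h), 1 hour to recover.
baseline = availability(168.0, 1.0)

# Option 1: double MTTF (one failure every two weeks), same recovery time.
longer_mttf = availability(336.0, 1.0)

# Option 2: keep MTTF, but recover in 5 minutes instead of 1 hour.
shorter_mttr = availability(168.0, 5.0 / 60.0)

for label, value in [("baseline", baseline),
                     ("2x MTTF", longer_mttf),
                     ("MTTR 5 min", shorter_mttr)]:
    print(f"{label:>10}: {value:.5%}")
```

Under these assumed numbers, cutting MTTR from one hour to five minutes raises availability considerably more than doubling MTTF does.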

Leading questions

Typical leading questions are:

- What can go wrong and how can I respond to it?

- What can I do if a remote service is not available?

- How can I detect and handle invalid requests (when being called) and responses (when calling)?

- How can I fix bugs and other defects quickly?

The first question is the general, overarching one. The other questions are examples of more specific failure types.

Typical measures

The leading questions lead to a different set of typical measures:

- Probably the most important additional measure is the implementation of fallbacks, i.e., sensible alternative business-level responses that are executed if the regular request processing path cannot be completed due to a technical error. This is where the measures extend to the business level, in contrast to the plateau of stability, where the focus was on technical-level measures only (see also the discussion below).

- Complete parameter checking for incoming requests as well as for responses from called systems. Even though this measure appears self-evident, it is surprisingly often poorly implemented.[3]

- Rigorous deployment automation using CI/CD, IaC/IfC and the like. This is less about repeatability than about speed: the faster new software releases can be deployed without compromising quality, the shorter MTTR becomes in the case of a software bug.

- Minimizing the startup time of system components. Short startup times can significantly reduce MTTR, so that recovery does not depend solely on redundancy and failover.

- Thorough application- and business-level monitoring to detect errors early and ideally handle them before they become failures.
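As a minimal Python sketch of complete parameter checking, the code below validates both an incoming request and the response of a called system before using either. The request fields (`customer_id`, `quantity`), their limits, the `price` response field and the names `ValidationError` and `OrderRequest` are hypothetical assumptions for illustration.

```python
import math
from dataclasses import dataclass


class ValidationError(ValueError):
    """Raised when a request or a response violates its expected contract."""


@dataclass(frozen=True)
class OrderRequest:
    customer_id: str
    quantity: int


def validate_request(raw: dict) -> OrderRequest:
    """Check every parameter of an incoming request: presence, type and range."""
    customer_id = raw.get("customer_id")
    if not isinstance(customer_id, str) or not customer_id.isalnum() or len(customer_id) > 32:
        raise ValidationError("invalid customer_id")
    quantity = raw.get("quantity")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int) or not 1 <= quantity <= 100:
        raise ValidationError("invalid quantity")
    return OrderRequest(customer_id, quantity)


def validate_price_response(raw: dict) -> float:
    """Check the response of a called system just as strictly as incoming requests."""
    price = raw.get("price")
    if (isinstance(price, bool) or not isinstance(price, (int, float))
            or not math.isfinite(price) or price < 0):
        raise ValidationError("invalid price in response")
    return float(price)


print(validate_request({"customer_id": "C42", "quantity": 3}))  # OrderRequest(customer_id='C42', quantity=3)
print(validate_price_response({"price": 19.99}))  # 19.99
```

The point of the sketch is symmetry: the response of the called system is treated with the same distrust as the incoming request.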

Note that at the plateau of robustness, monitoring is still sufficient. Full-fledged observability is not yet needed because quickly detecting and responding to expected errors is enough.[4]
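Monitoring in the sense used here means watching predefined signals and raising an alarm when a threshold is crossed. As a minimal Python sketch, the hypothetical `ErrorRateMonitor` below tracks the error rate over a sliding window of recent requests; the window of 100 requests and the 5% threshold are arbitrary assumptions for illustration.

```python
from collections import deque


class ErrorRateMonitor:
    """Watches a predefined signal (error rate) and flags a predefined threshold breach."""

    def __init__(self, window_size: int = 100, threshold: float = 0.05) -> None:
        # Outcomes of the most recent requests: True = error, False = success.
        self.outcomes = deque(maxlen=window_size)
        self.threshold = threshold

    def record(self, is_error: bool) -> None:
        self.outcomes.append(is_error)

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        return self.error_rate() > self.threshold


monitor = ErrorRateMonitor()
for i in range(100):
    monitor.record(is_error=(i % 10 == 0))  # every tenth request fails: 10% errors
print(monitor.error_rate(), monitor.should_alert())  # 0.1 True
```

Such a monitor can only alert on conditions someone anticipated in advance, which is exactly why it suffices for expected errors but not for surprises.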

To clarify the distinction between errors and failures used in the monitoring measure above (following “Patterns for Fault Tolerant Software” by Robert S. Hanmer):

- A fault is a defect lurking in a system that can cause an error, e.g., a software bug or a weak hard disk sector.

- An error is an incorrect system behavior that can be observed from inside the system and may cause a failure. However, an error cannot be observed from outside the system (yet). From the outside, the system still behaves according to its specification.

- A failure is an incorrect system behavior that can be observed from outside the system, i.e., the perceived system’s behavior deviates from its specification.

The idea is to detect and handle errors before they become visible from the outside, i.e., become failures. Handling errors typically happens in two ways:

- Error recovery – Fixing the cause and recovering from the error situation. Error recovery is possible only if the system has enough time, information and resources to immediately fix the problem.

- Error mitigation – Falling back to a defined reduced service level (a.k.a. graceful degradation of service). If the system does not have the time, information or resources to immediately fix the problem, it falls back to a defined reduced service level until either the problem vanishes (e.g., a temporary overload situation) or the system has enough time, information and resources to fix the problem (e.g., by utilizing a supervisor, control plane or maintenance job that satisfies the prerequisites).

If possible, the error is fixed immediately and normal operation continues without anybody observing a problem from the outside. This is the best case.

However, sometimes the system either lacks

- the time (recovery would take too long and violate the system’s SLA),

- the information (the system does not know how to fix the problem, e.g., if a called system does not respond) or

- the resources (the system does not have access to the required resources, e.g., additional compute nodes to handle an overload situation)

to immediately recover from the error.

In such a situation, it needs to gracefully degrade its service level. E.g., in an overload situation, it may shed some requests. Or if it cannot reach its database, it may still respond to queries using cached data but it does not accept write requests. But no matter how the system reduces its service level, it must be a defined degradation of service, i.e., the degraded service level must be described in the specification of the system’s behavior. Otherwise, it would be a deviation from the specified behavior, i.e., a failure.
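The database example can be sketched in Python as follows. It assumes a hypothetical `Database` client that raises `ConnectionError` when unreachable and uses an in-process dictionary as cache; `Service` and `Response` are illustrative names as well. The point is that the degraded mode (stale reads allowed, writes rejected with an explicit status) is a defined behavior, not an accident.

```python
from dataclasses import dataclass
from typing import Optional


class Database:
    """Hypothetical database client; 'available' simulates reachability."""

    def __init__(self) -> None:
        self.available = True
        self.rows = {}

    def read(self, key: str) -> str:
        if not self.available:
            raise ConnectionError("database unreachable")
        return self.rows[key]

    def write(self, key: str, value: str) -> None:
        if not self.available:
            raise ConnectionError("database unreachable")
        self.rows[key] = value


@dataclass
class Response:
    status: str  # "ok", "degraded" (served from cache) or "rejected"
    value: Optional[str] = None


class Service:
    def __init__(self, db: Database) -> None:
        self.db = db
        self.cache = {}  # last known values, read only in degraded mode

    def query(self, key: str) -> Response:
        try:
            value = self.db.read(key)
        except ConnectionError:
            # Defined degradation: answer reads from possibly stale cached data.
            if key in self.cache:
                return Response("degraded", self.cache[key])
            return Response("rejected")
        self.cache[key] = value
        return Response("ok", value)

    def update(self, key: str, value: str) -> Response:
        try:
            self.db.write(key, value)
        except ConnectionError:
            # Defined degradation: no writes are accepted while the database is down.
            return Response("rejected")
        self.cache[key] = value
        return Response("ok", value)


db = Database()
svc = Service(db)
svc.update("greeting", "hello")
db.available = False  # simulate a database outage
print(svc.query("greeting"))         # Response(status='degraded', value='hello')
print(svc.update("greeting", "hi"))  # Response(status='rejected', value=None)
```

Both degraded responses would need to be part of the system's specified behavior; otherwise, as argued above, they would still count as failures.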

If the system runs at a reduced service level, it will attempt to resume normal operations as quickly as possible. Typically, some kind of external supervisor, error handler, control plane or maintenance job with access to more time, information and resources will attempt to resolve the problem and restore full service.

Graceful degradation of service is one variant of the fallback pattern that was mentioned before as the first typical measure. The fallback pattern basically asks what to do if something does not work as intended, i.e., what your plan B is if plan A fails. The fallback action often offers a reduced service level from a usage perspective. However, depending on the system’s criticality and its functional design, a fallback does not necessarily mean a reduced service level. It simply means executing a different path if the primary path does not work due to a technical error.

The key aspect of the fallback pattern is that the alternative action means a different behavior in terms of business logic. This means the decision of what to do if plan A fails cannot be left to a software engineer who just writes the code for a remote call and now must handle an IOException or a similar error the remote call may raise. That poor engineer has no idea what to do in such a situation or which alternative logic to implement.

Therefore, if the decision is left to the engineer alone, the typical error handling is to re-throw the exception, usually wrapped in a runtime exception (or whatever mechanism your programming language offers to create an exception that does not need to be handled explicitly at each level of the call stack it travels up).

The exception is then eventually caught by the generic exception handler that is part of the top-level request processing loop. There, the exception is usually just written to the log because nobody knows how to handle it at this level either. Finally, processing continues with the next external request because stopping the service is not an option. And the users then wonder why the system silently “swallows” their requests without doing anything.[5]

Therefore, implementing a fallback always means involving the business experts. The leading question is: How shall the system respond if X does not work (with X being an action that can fail due to a technical error, e.g., a failing remote call)? Depending on the criticality and importance of the failing action, the answers will be very different. E.g., a failing request to a recommendation engine may be silently ignored (but without skipping the rest of the processing path as sketched above), while a failing checkout process in an e-commerce system probably will be a big deal because this usually is where the company makes its money.
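The recommendation engine case can be sketched in Python as a business-level fallback that, unlike the “log and skip” handling, lets the rest of the processing path run. The names (`fetch_recommendations`, `recommendations_with_fallback`, `render_product_page`, `RemoteCallError`) and the decision to show an empty recommendation list are hypothetical; in practice, the business experts decide what the fallback is.

```python
import logging

logger = logging.getLogger(__name__)


class RemoteCallError(Exception):
    """Technical error raised by a (hypothetical) remote call."""


def fetch_recommendations(product_id: str) -> list[str]:
    # Hypothetical remote call to a recommendation engine; here it always fails.
    raise RemoteCallError("recommendation engine timed out")


def recommendations_with_fallback(product_id: str) -> list[str]:
    try:
        return fetch_recommendations(product_id)
    except RemoteCallError:
        # Assumed business decision: recommendations are nice to have,
        # so show none instead of failing the whole request.
        logger.warning("recommendations unavailable for %s, using fallback", product_id)
        return []


def render_product_page(product_id: str) -> dict:
    # The rest of the processing path still runs, regardless of the fallback.
    return {
        "product_id": product_id,
        "recommendations": recommendations_with_fallback(product_id),
        "status": "ok",
    }


print(render_product_page("P-123"))  # {'product_id': 'P-123', 'recommendations': [], 'status': 'ok'}
```

The error is still logged, but the user gets a complete, specified response instead of a silently dropped request.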

The answers also imply very different development and runtime budgets that can be spent on the fallback, ranging from nothing to very large budgets. This is something the IT department cannot (and should not) decide on its own. The business department must be included in the decision of how best to handle a failure.

This is one of the big differences between the plateau of stability and the plateau of robustness. At the plateau of stability, the idea was that failures can be avoided completely using purely technical means. Therefore, the business department did not need to care about failures. At the plateau of robustness, it is clear that failures are inevitable and need to be embraced. This includes deciding at a business level how to handle potential failures, i.e., the business department is part of the game.

Trade-offs

The plateau of robustness approach has several trade-offs:

- Reaching this plateau requires more effort than reaching the plateau of stability from the valley did.

- A change of mindset from avoiding failures to embracing failures is required, which is typically the hardest part. A change of mindset is never easy; it takes time and effort.

- The impact radius grows from IT systems only to IT systems and, partially, processes (due to rigorous automation and the additional focus on reducing MTTR).

- The impact radius grows from the IT department alone to both the IT department and the business department being involved in deciding how best to handle failures.

- A very tight ops-dev collaboration is required to understand whether the implemented measures have the desired effect and to quickly handle failures that require code changes.

- The approach works well with an economies-of-speed business model, i.e., in companies that focus on market feedback cycle times, because it supports the need to respond quickly to external forces (the same technical and process-related measures that enable quick failure handling can also be used to respond quickly to changing market needs and demands).

- It is possible to achieve very high availability with this approach. As a rule of thumb, several nines (more than 99.9%) can be achieved for a single runtime component, and more than 99.9% is also achievable for a group of collaborating services.

- Resilience is partially achieved – known adverse events and situations can be handled successfully.

When to use

Such a setup is a good fit if your availability needs are high, as a rule of thumb 99.9% or more.

It is also suitable if the system is distributed internally, e.g., using a microservice approach, or if a lot of other systems need to be called to complete an external request. As very high availability can be achieved for each individual runtime component, the overall availability of the group of components involved in completing an external request is usually still sufficient without additional availability measures at the component group level.
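This reasoning can be checked with simple arithmetic. Assuming independent failures and a request that needs every service in a call chain to succeed, the availability of the chain is the product of the individual availabilities. The chain length of five services and the per-service figures below are illustrative assumptions.

```python
import math


def chain_availability(availabilities: list[float]) -> float:
    """Availability of a request that needs every service (independent failures assumed)."""
    return math.prod(availabilities)


# Hypothetical call chain of five services with identical availability.
for per_service in (0.999, 0.9995, 0.9999):
    overall = chain_availability([per_service] * 5)
    print(f"5 services at {per_service:.2%} each -> {overall:.3%} overall")
```

Under these assumptions, components at 99.99% keep a five-service chain above 99.9%, whereas components at 99.9% would pull the chain down to roughly 99.5%.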

When to avoid

Such a setup is not sufficient for safety-critical contexts.

It also tends to be insufficient if the availability demands are very high but the technical environment is very uncertain.

It also tends to be insufficient if high innovation speed is required in highly uncertain business environments.[6]

The point in all three cases is the level of uncertainty involved. Uncertainty leads to surprises, i.e., to situations not foreseen. However, the plateau of robustness only deals with expected failure modes. Surprises are not covered (see also the blind spot below). We will discuss this in more detail in the next post.

Impact radius

The impact radius includes the IT systems, the IT department, the business department and processes (regarding automation and the reduction of MTTR).

Blind spot

The blind spot of this setup lies in the limits of perception. It works nicely for expected failure modes. However, it is not ready for unexpected failures, i.e., surprises.

Summing up

The plateau of robustness is the second interim stop on our journey towards resilience. It accepts that failures are inevitable and manifold. Therefore, it changes the attitude from avoiding failures to embracing failures.

It takes some effort to reach the plateau: A mindset change is needed. More parties are involved. Processes are partially affected. More failure modes are taken into account. Measures often need to be implemented at the application level and cannot simply utilize infrastructure and middleware means. Therefore, not many companies have reached this plateau yet.

It allows for very good availability, even if the underlying system is internally distributed. However, it is not suitable for safety-critical systems.

In the next post, we will discuss what it means to also prepare for surprises, the realizations needed to guide us to the next plateau, and probably the biggest obstacle we will face on our journey. Stay tuned … ;)

[1] Note that I use the term “stability” primarily to distinguish this approach from the robustness approach. Depending on your reasoning, you could use both terms interchangeably. However, I associate a certain degree of rigidity with “stability” while I associate “robustness” with a certain degree of flexibility. E.g., a concrete pillar is stable in that sense while a tree is robust. The concrete pillar will withstand a certain level of stress without deformation and then break, while the tree will rather yield under stress and return to its original shape after the stress ends (of course, a tree will also eventually break if the stress level becomes too high, but usually it can absorb and recover from stress much better than a concrete pillar of the same size).

[2] I always stress that the reduced service level an application may fall back to as part of an error mitigation measure needs to be defined. If an error occurs and the application continues working (instead of simply crashing or ceasing to respond), it will automatically fall back to some kind of reduced service level (e.g., only responding after a couple of seconds instead of in less than half a second). However, if this service level is not defined as part of the system’s behavior specification, it is still considered a failure: the system’s behavior deviates from its specification. It is that simple.

In such situations, people often object that their systems do not have a defined specification of their behavior. My typical (somewhat offhand) response then is: Good luck! If you do not provide a specification of your system’s expected behavior (at least as an SLA), your customers and users will implicitly create an expectation, which will basically be: 100% available, all the time. No bugs. No failures. Never. Ever. And if it is unclear how a requirement implemented in the system was meant, whatever idea exists in the heads of your customers and users becomes the expected correct behavior. Basically, the expected behavior is the 100% availability trap to the max, including expected behavior you never heard of because it only exists in the heads of your peers. This leaves you between a rock and a hard place if any problem occurs. Your peers will insist that their idea of the system behavior is correct and you are wrong. You will fight a losing battle because a commonly agreed-upon specification of the system’s behavior does not exist, only ideas in people’s heads.

Therefore, my recommendation is to always make the expected system behavior explicit. You will still have discussions regarding the expected system behavior, and your peers will still try to push you towards the 100% availability trap that lives in their heads. But now the discussion is explicit, and you can help your peers balance their availability expectations against the money they are willing (or able) to spend to achieve them.

[3] If everybody implemented complete parameter checking properly, probably more than 90% of all known CVEs would not exist.

[4] Observability means you can understand and reason about the internal state and condition of a system solely by observing its externally visible signals. This includes arbitrary (ad hoc) queries on the externally observed (and collected) signals. Traditionally, monitoring solutions do not support this. Instead, they focus on observing predefined signals and raising alarms if specific thresholds are crossed. The distinction between the two is somewhat blurry and you will find contradicting definitions (and the fact that many monitoring solution providers have lately relabeled their solutions as “observability solutions” does not make things easier). However, going by the original, non-IT definitions of the terms, observability is, simply put, a superset of monitoring.

[5] Depending on where in the execution path a silent error handling of the type “log and skip the rest of the request processing” happens, the processing may already have triggered several side effects while the user thinks that nothing happened. As a consequence, the user will probably trigger the request again, potentially triggering the side effects multiple times. For illustration, imagine a user wants to order an article. Checkout and everything works. Then, some relatively unimportant call fails (e.g., writing some real-time statistics information). The error is logged and the rest of the request processing, including the part where the user is informed about the successful completion of the checkout, is skipped. In such a situation, a user would most likely press the “Buy” button again and again, ordering the same stuff over and over. Of course, most e-commerce systems make sure that such behavior does not happen (anymore) because it would massively annoy customers. Thus, if such a silent error handling was ever implemented in a checkout process, it would have been fixed by now. Still, the example illustrates the problem well, and IT systems contain many request processing paths that are less exposed than an e-commerce checkout and still implement lots of such “log and skip the rest of the request processing” silent error handling routines.

[6] Remember that one of the consequences of the ongoing digital transformation is that business and IT have become inseparable. This also means you cannot change anything on the business side without touching IT. Therefore, the ability and speed of IT to respond to new demands (without compromising quality) limits how fast the business can respond to market movements.
