The long way towards resilience - Part 4

Availability revisited


In the previous post, we discussed the plateau of stability, the first interim stop on the journey towards resilience – what it is good for, what its limitations are and why it is quite popular.

In this post, we will discuss in more detail the 100% availability trap that I introduced at the end of the previous post, and revisit availability. We will also discuss the realizations needed to continue the journey to the next plateau.

The 100% availability trap

I already discussed the 100% availability trap in detail in a prior blog post. Therefore, I will keep it short here.

The 100% availability trap is the (still widespread) fallacy that IT systems are available 100% of the time.

It is the successor of the “Ops is responsible for availability” mindset: the implicit assumption that all parts of the system landscape one is not responsible for will never, ever fail.

Of course, if asked directly, everyone would agree that all parts of the system landscape can fail and that usually the time of failure cannot be predicted. Nevertheless, in practice this theoretical knowledge is widely ignored. People rarely create designs and implementations that consider potential failures of, e.g.:

- Operating systems

- Schedulers (e.g., Kubernetes) and the like

- Routers

- Switches

- Message busses

- Databases

- Service meshes

- Cloud services

- and especially of other applications and services.

The implicit design and implementation assumption is that everything outside the scope of one’s own application is available all the time. We can see it everywhere:

- Sending messages or events to a message bus is considered sufficient to guarantee the flawless processing of the messages or events.

- Building applications that try to establish connections to all systems they collaborate with at startup. If any of these systems is not available, the application simply crashes with an error message because this situation is not expected. [1]

- Blindly relying on the perfect availability of a cloud provider’s services. E.g., when Kinesis once failed at AWS, the applications of most companies using Kinesis also failed because they were not designed with a potential failure of Kinesis (or any other AWS service) in mind. All those applications blindly assumed that Kinesis would never fail, even though the SLA of Kinesis stated otherwise.

- And so on …
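One way out of the crash-at-startup pattern from the second bullet is to treat an unavailable collaborator as an expected situation: retry with exponential backoff and, if it is still unavailable, start anyway in a degraded mode. The following is a minimal Python sketch, assuming a hypothetical `connect` callable that raises `ConnectionError` while the collaborator is unreachable. The attempt count and delays are illustrative values, not recommendations.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def connect_with_backoff(
    connect: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Try to connect to a collaborator, retrying with exponential backoff.

    Returns the connection, or None if the collaborator is still unreachable
    after max_attempts, so the caller can start in a degraded mode (and keep
    retrying in the background) instead of crashing.
    """
    for attempt in range(max_attempts):
        try:
            return connect()
        except ConnectionError:
            if attempt == max_attempts - 1:
                break
            # Exponential backoff with "full jitter", capped at max_delay_s,
            # so that many restarting systems do not retry in lockstep.
            delay = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, delay))
    return None


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_connect() -> str:
        # Hypothetical collaborator that is not up yet for the first two calls.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("collaborator not up yet")
        return "connection"

    # Skip the real sleeping in this demo.
    print(connect_with_backoff(flaky_connect, sleep=lambda _: None), calls["n"])
```

Because such an application no longer insists on its collaborators being up first, restarting a whole landscape after an incident does not depend on getting the start order exactly right.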

My personal explanation model for this widespread behavior is that it is a generalization of the deeply ingrained “Ops is responsible for availability” mindset. It appears that a “somebody else’s problem” (a.k.a. S.E.P.) attitude towards a system part’s availability very often leads to an implicit 100% availability assumption (even if the responsible party makes clear in their SLA that they do not guarantee 100% availability).

The 100% availability trap very often also leads to some kind of implicit “induction proof” regarding availability:

- Everything except the system parts I am responsible for is 100% available.

- Therefore, if I avoid failures in my parts using the accepted redundancy, failover and overload avoidance techniques (see the plateau of stability description in the previous post), everything is 100% available.
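The flaw in this reasoning becomes obvious with a bit of arithmetic. Assuming, for simplicity, that failures are independent and that a request needs all parts to work (i.e., the parts are used serially), the availabilities multiply. The figures in this Python sketch are illustrative, not measured: 99,95% for one’s own parts and 99,9% for each of ten dependencies already result in an overall availability below 99%.

```python
from math import prod


def serial_availability(availabilities: list[float]) -> float:
    """Availability of a system that needs ALL listed parts to serve a request.

    Assumes independent failures: the probability that all parts are up at
    the same time is then the product of their individual availabilities.
    """
    return prod(availabilities)


if __name__ == "__main__":
    own_parts = 0.9995            # illustrative: the parts "I" am responsible for
    dependencies = [0.999] * 10   # illustrative: ten dependencies at 99.9% each
    overall = serial_availability([own_parts, *dependencies])
    print(f"overall availability: {overall:.2%}")  # a bit below 99%
```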

But perhaps the most intriguing aspect of the 100% availability trap is that it completely contradicts all accepted and proven engineering practices. Pat Helland and Dave Campbell started their seminal paper “Building on Quicksand” with the sentence:

Reliable systems have always been built out of unreliable components.

This means we expect the components we use to fail, and thus we design and implement our systems in a way that they work reliably in spite of those failures. At least, this is the accepted and proven engineering practice.

Except for enterprise software development!

For enterprise software development, we tend to turn the practice upside down:

In enterprise software development, we build unreliable systems (applications and services) and expect the components used (infrastructure and middleware) to make them reliable.

This is the exact opposite of how reliable systems are built – an effect of the 100% availability trap which is still very widespread.

This is why so many companies are stuck at the plateau of stability: they have not yet overcome the 100% availability trap.

The second insight

However, the 100% availability trap is a dead end regarding resilience because, as Michael Nygard put it in one of his blog posts:

Continuous partial failure is the normal state of affairs.

Contemporary complex and highly interconnected system landscapes almost always run in some kind of degraded mode, i.e., at almost any given time, some of their parts are not working as expected.

And Werner Vogels, the CTO of Amazon, used the following, only partially facetious definition to describe distributed systems:

Everything fails, all the time.

Both know a lot about distributed systems and running big, complex, distributed and highly interconnected system landscapes. Their findings are the same:

Failures are inevitable.

Basically, this realization is not new and it is not limited to distributed systems. E.g., Michael Nygard makes clear in his aforementioned post that failure is something very normal for all kinds of systems.

However, up to the plateau of stability, people still have their blinders on, trying to ignore the fact that failures are inevitable.

Therefore, the second realization needed is to accept that failures are inevitable, which leads us away from avoiding failures towards embracing them.

Availability revisited

What does that mean for availability? How can we maximize availability if failures are inevitable?

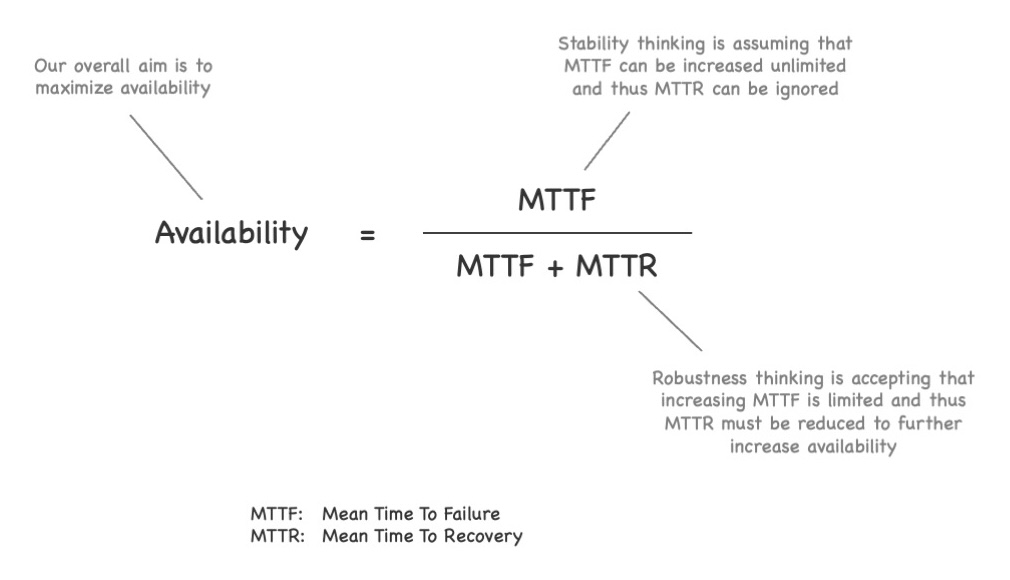

To answer these questions, let us briefly revisit the formula for availability:

Availability := MTTF / (MTTF + MTTR)

with

- MTTF: Mean Time To Failure, the mean time from the beginning of normal operations until the system under observation fails, i.e., exhibits a behavior that deviates from its specification.

- MTTR: Mean Time To Recovery, the mean time from a failure until the system under observation is back to normal operations, i.e., works as specified.

Looking at this formula, we can make a few observations:

- The denominator denotes the total time, while the numerator denotes the time the system works as intended.

- MTTF and MTTR are both non-negative numbers. In theory, either of them could be 0. In practice, however, we can safely assume that both are positive: MTTF is not 0 as long as the system under observation is capable of working according to its specification at all, and MTTR is not 0 because recovering from a failure always takes some time.

- Because MTTF and MTTR are both positive, the denominator is always bigger than the numerator, i.e., the value of the fraction is a number between 0 and 1 (both excluded in practice), or between 0% and 100% if you prefer percentages.

- The bigger MTTF is compared to MTTR, the closer availability is to 1 (or 100%).
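These observations are easy to check numerically. The following Python function is a direct transcription of the formula above, expecting MTTF and MTTR in the same time unit; the input checks are an addition for illustration.

```python
def availability(mttf: float, mttr: float) -> float:
    """Availability := MTTF / (MTTF + MTTR), with MTTF and MTTR in the same unit."""
    if mttf < 0 or mttr < 0:
        raise ValueError("MTTF and MTTR must not be negative")
    if mttf + mttr == 0:
        raise ValueError("availability is undefined if MTTF and MTTR are both 0")
    return mttf / (mttf + mttr)


if __name__ == "__main__":
    # Only the ratio of MTTF to MTTR matters: 100:1 and 1000:10 give the same value.
    print(availability(100, 1), availability(1000, 10))
    # For a fixed MTTR, a bigger MTTF moves availability closer to 1.
    for mttf in (10, 100, 1_000, 10_000):
        print(mttf, f"{availability(mttf, 1):.4%}")
```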

In the end, what we care about is bringing availability as close to 1 (or 100%) as possible.

At the plateau of stability, people try to avoid failures. They try to make MTTF so big that MTTR does not matter. If you asked them, they probably would not say that they try to make MTTF infinitely big, but rather that they attempt to make it sufficiently big. [2]

Such a “sufficiently big” MTTF might be, for example, 1.000.000 hours. This would mean an expected uptime of more than 100 years. If you assume that it is not you but your descendants who will need to deal with a failure, you do not care a lot about MTTR, simply because you do not expect a failure to happen. It does not matter if MTTR is an hour, a day or a week. Even if MTTR were a week, the overall availability would still be higher than 99,98%.

But as MTTF is assumed to be so big, nobody thinks about 99,98%; instead, everyone expects the system never to fail. Welcome to the 100% availability trap!

However, if we accept that failures are inevitable, we also need to accept that the MTTF of our system has an upper bound. We cannot increase MTTF arbitrarily. We do not know the upper bound exactly, but most likely it is closer to 1.000 hours (which is a bit more than 40 days) than to 1.000.000 hours.

If we assume an MTTF of 1.000 hours for our system, even an MTTR of “just” a day would be a problem because that would mean an overall availability of approx. 97,7%, which is insufficient for most scenarios. An MTTF of 1.000 hours also makes clear that failures will not hit our descendants but us!

This means that simply trying to increase MTTF further and further while ignoring MTTR is not an option if we accept that failures are inevitable and thus MTTF has an upper limit. We should still try to achieve a good MTTF, but to increase our overall availability further, we also need to look at our MTTR and try to reduce it.

E.g., if we bring down our MTTR from a day to an hour in our example, availability would increase to 99,9% (assuming an MTTF of 1.000 hours), which sounds a lot better than 97,7%.
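The two scenarios can be compared side by side with a few lines of Python. This sketch simply evaluates the availability formula with both values in hours: with an MTTF of 1.000.000 hours, even an MTTR of a week keeps availability above 99,98%, whereas with an MTTF of 1.000 hours, an MTTR of a day yields about 97,7% and an MTTR of an hour about 99,9%.

```python
def availability(mttf_hours: float, mttr_hours: float) -> float:
    # Availability := MTTF / (MTTF + MTTR), both given in hours
    return mttf_hours / (mttf_hours + mttr_hours)


MTTRS = {"a week": 24 * 7, "a day": 24, "an hour": 1}

# The "sufficiently big" MTTF from the plateau of stability vs. the more
# realistic upper bound assumed in the text.
for mttf in (1_000_000, 1_000):
    for label, mttr in MTTRS.items():
        print(f"MTTF {mttf} h, MTTR of {label}: {availability(mttf, mttr):.3%}")
```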

To sum up: If we understand that failures are inevitable, we also realize that MTTF has an upper limit and cannot be increased arbitrarily. Thus, to achieve a desired high availability, we additionally need to reduce MTTR.
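Solving the availability formula for MTTR turns this into a budget: for a target availability A (with 0 < A < 1) and a given MTTF > 0, it yields the largest MTTR that still meets the target. This is just algebra on the formula above, applied to the numbers of the example:

```latex
\begin{aligned}
A = \frac{\mathit{MTTF}}{\mathit{MTTF} + \mathit{MTTR}}
  \quad&\Longleftrightarrow\quad
  \mathit{MTTR} = \mathit{MTTF} \cdot \frac{1 - A}{A} \\[4pt]
\frac{\mathit{MTTF}}{\mathit{MTTF} + \mathit{MTTR}} \ge A
  \quad&\Longleftrightarrow\quad
  \mathit{MTTR} \le \mathit{MTTF} \cdot \frac{1 - A}{A} \\[4pt]
A = 99{,}9\,\%,\ \mathit{MTTF} = 1000\,\mathrm{h}
  \quad&\Longrightarrow\quad
  \mathit{MTTR} \le 1000\,\mathrm{h} \cdot \frac{0{,}1}{99{,}9} \approx 1\,\mathrm{h}
\end{aligned}
```

With an MTTF of 1.000.000 hours, the same target would allow an MTTR of roughly 1.000 hours, i.e., about six weeks, which explains why MTTR seems irrelevant at the plateau of stability.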

The third insight

With this insight, we then ponder the failures that could hit us and how to quickly recover from them, i.e., how to reduce our MTTR [3]. This immediately leads to the third insight:

There are failure modes beyond crashes and overload situations.

At the plateau of stability, people tend to focus on crashes and overload failures and try to avoid them by all means, assuming that this is sufficient to achieve the desired availability.

If you accept that failures are inevitable, you start to look closer at the failures that hit your system. Then you immediately realize that there is a whole plethora of failure types just waiting to challenge the robustness of your system, like, e.g.:

- Crash failures

- Overload failures

- Omission failures

- Timing failures

- Response failures

- Byzantine failures

- Software bugs

- Configuration bugs

- Firmware bugs

- Security vulnerabilities

- …
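Timing failures illustrate how these failure modes differ from crashes: the called system is up, it just does not answer in time, and without a deadline the caller ends up waiting, too, which is how timeouts propagate along call chains. A minimal Python sketch of guarding a call with a deadline, using the standard `concurrent.futures` module; `slow_dependency`, the 0.2-second budget and the fallback value are hypothetical.

```python
import concurrent.futures
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool, so that a hanging call does not block the caller itself.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def call_with_deadline(fn: Callable[[], T], timeout_s: float, fallback: T) -> T:
    """Run fn, but stop waiting after timeout_s seconds and return fallback.

    The worker thread keeps running in the background (Python threads cannot
    be killed), so real code also needs timeouts on the underlying I/O.
    """
    future = _POOL.submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        future.cancel()  # only has an effect if fn has not started yet
        return fallback


def slow_dependency() -> str:
    # Hypothetical dependency that does answer, but far too late.
    time.sleep(1.0)
    return "fresh answer"


if __name__ == "__main__":
    print(call_with_deadline(slow_dependency, timeout_s=0.2, fallback="cached answer"))
```

Whether a stale fallback is acceptable, or whether the caller should report an error, depends on the use case; the deadline itself is what keeps the caller responsive.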

All these failure modes lead to some quite nasty effects in practice, like, e.g.:

- We completely lose messages or receive partial updates.

- We receive messages multiple times.

- We run into timeouts, up to a complete standstill of the whole system landscape due to call chain dependencies.

- We receive updates out of order, which also leads to partial, out-of-sync knowledge of the involved nodes.

- We encounter split brain situations where parts of the system landscape do not see each other and their respective updates.

- We encounter persistent malfunctions of systems or system parts, e.g., due to software bugs.

- We run into metastable failures, i.e., situations where the failures persist even if the failure cause has been removed.

- We lose data or end up with corrupted data.

- We leak confidential information.

- And so on …
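Two of these effects, duplicate and out-of-order delivery, can be handled on the receiving side. The following Python sketch shows one common approach, not the only one, assuming that each update carries a hypothetical per-entity `version` number that the sender increments on every change. The receiver ignores any update that is not newer than what it has already applied, which makes redelivery harmless and keeps stale updates from overwriting newer state. Lost messages and version gaps need further measures (e.g., acknowledgements and retries) that this sketch does not cover.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Update:
    entity_id: str
    version: int   # hypothetical: incremented by the sender on every change
    payload: dict


class VersionedStore:
    """Applies updates idempotently and ignores stale, out-of-order ones."""

    def __init__(self) -> None:
        self._latest: dict[str, Update] = {}

    def apply(self, update: Update) -> bool:
        """Return True if the update was applied, False if it was ignored."""
        current = self._latest.get(update.entity_id)
        if current is not None and update.version <= current.version:
            # Same version: duplicate delivery. Lower version: stale update
            # that arrived out of order. Either way, keep the newer state.
            return False
        self._latest[update.entity_id] = update
        return True

    def get(self, entity_id: str) -> Optional[dict]:
        current = self._latest.get(entity_id)
        return None if current is None else current.payload


if __name__ == "__main__":
    store = VersionedStore()
    store.apply(Update("order-1", 1, {"status": "created"}))
    store.apply(Update("order-1", 2, {"status": "paid"}))
    store.apply(Update("order-1", 2, {"status": "paid"}))     # redelivered duplicate
    store.apply(Update("order-1", 1, {"status": "created"}))  # late, out-of-order update
    print(store.get("order-1"))  # {'status': 'paid'}
```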

All these nasty things and many more can happen, which goes far beyond the effects of crash and overload failures.

E.g., I once worked with a customer who lived at the plateau of stability. They put an enormous amount of work into avoiding crash and overload failures but mostly ignored all other types of failures. Software bugs typically took them days to weeks to fix because they had a very rigid and complex software deployment procedure with lots of stages and human sign-offs involved to “make sure nothing goes wrong” (remember: at the plateau of stability, MTTR is typically ignored, which means that manual deployment processes are considered fine).

I also witnessed a bug in the replication configuration for the worker node state data of one of their control planes. The configuration bug went unnoticed until one day the original primary of the control plane failed. Another primary was automatically elected. So far, so good. Unfortunately, due to the bug in the replication configuration, the new primary had never received any updates about the state of the worker nodes it controlled. The state database of the new primary was basically a clean slate – nothing in there. As a consequence, the control plane immediately shut down all worker nodes because, based on the (missing) state data of the new primary, it concluded that nothing should be running.

It took them almost a week to resume normal operations because, in their plateau-of-stability mindset, this was something that could not happen. Thus, none of their myriad sophisticated processes and rules offered a way to deal with it, as they all implicitly assumed that such failures simply do not happen.
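A control plane can guard against this class of failure by treating implausible input as a likely failure rather than as the truth. The following Python sketch is hypothetical and not a reconstruction of the customer’s system; the names and the 20% threshold are assumptions for illustration. Before acting, the reconciliation step refuses to stop more than a given fraction of the running workers at once and escalates instead, so in a setup like this, an empty state database leads to an alert rather than a mass shutdown.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    to_stop: set[str]
    to_start: set[str]


class SuspiciousPlanError(Exception):
    """Raised when a plan looks more like a bug than an intended change."""


def reconcile(desired: set[str], running: set[str],
              max_stop_fraction: float = 0.2) -> Plan:
    """Compute which workers to stop and start, refusing implausibly drastic plans.

    max_stop_fraction is a hypothetical safety threshold: a plan that would stop
    a larger share of the running workers in one step is not executed but
    escalated, e.g., because the desired state is suspiciously empty.
    """
    plan = Plan(to_stop=running - desired, to_start=desired - running)
    if running and len(plan.to_stop) / len(running) > max_stop_fraction:
        raise SuspiciousPlanError(
            f"refusing to stop {len(plan.to_stop)} of {len(running)} workers"
        )
    return plan


if __name__ == "__main__":
    running = {f"worker-{i}" for i in range(10)}
    # A normal change: one worker is no longer wanted.
    print(reconcile(desired=running - {"worker-3"}, running=running).to_stop)
    # The "clean slate" from the story: an empty desired state.
    try:
        reconcile(desired=set(), running=running)
    except SuspiciousPlanError as err:
        print("escalating to a human:", err)
```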

But if you accept that failures are inevitable, you also start to take a closer look at those failure types. You especially ask yourself how to detect and recover from such failures faster in order to reduce MTTR.
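Knowing the current MTTR is a prerequisite for reducing it. The following is a minimal Python sketch of estimating MTTF and MTTR from incident records. It assumes a hypothetical `Incident` record with failure and recovery timestamps, non-overlapping incidents, and an observation window that fully contains them; real incident data is usually messier than that.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    failed_at: datetime      # when the deviation from the specification began
    recovered_at: datetime   # when normal operations were restored


def estimate_mttf_mttr(incidents: list[Incident], start: datetime,
                       end: datetime) -> tuple[timedelta, timedelta]:
    """Estimate MTTF and MTTR over the observation window [start, end].

    Assumes non-overlapping incidents that lie completely inside the window.
    MTTF = total uptime / number of failures, MTTR = total downtime / number
    of failures.
    """
    if not incidents:
        raise ValueError("no failures observed yet; MTTF is only bounded from below")
    downtime = sum((i.recovered_at - i.failed_at for i in incidents), timedelta())
    uptime = (end - start) - downtime
    return uptime / len(incidents), downtime / len(incidents)


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    incidents = [
        Incident(start + timedelta(hours=300), start + timedelta(hours=303)),
        Incident(start + timedelta(hours=700), start + timedelta(hours=701)),
    ]
    mttf, mttr = estimate_mttf_mttr(incidents, start, start + timedelta(hours=1_000))
    hour = timedelta(hours=1)
    print(f"MTTF = {mttf / hour:.0f} h, MTTR = {mttr / hour:.0f} h")  # MTTF = 498 h, MTTR = 2 h
```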

These two realizations – that failures are inevitable and that many kinds of failures exist – lead us to our second interim stop, which we will discuss in our next post.

Summing up

We discussed the 100% availability trap and learned how it is a false friend, leading us to invalid assumptions. This led us to the insight that failures are inevitable.

We then revisited availability and saw that if we want to maximize availability, it is not enough to increase MTTF but we also need to reduce MTTR. Pondering how to reduce MTTR led to the third insight that many types of failures exist that need to be taken into account.

With these two insights, we are ready to move on to the next plateau, the plateau of robustness, which we will discuss in our next post. Stay tuned … ;)

[1] By the way, this kind of programming leads to a very nasty system landscape behavior after a severe incident that brought the whole system landscape, or at least a bigger part of it, down. If the administrators try to restart all the systems, basically all of them immediately shut themselves down again because one or more systems needed for collaboration are not yet up. The administrators then have to figure out the system dependency graph manually and bring up the systems one by one in the correct order. In the worst case, a cyclic dependency between some systems has evolved over time, and the administrators are out of luck.
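The manual work described here is essentially a topological sort of the dependency graph, which can be automated. The following is a minimal sketch using Python’s standard `graphlib` module (Python 3.9+); the system names and dependencies are made up. It also shows why a cyclic dependency leaves the administrators out of luck: no valid start order exists, and `graphlib` reports the cycle.

```python
from graphlib import CycleError, TopologicalSorter


def start_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Return an order in which every system starts after all systems it needs."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    # Hypothetical landscape: each system maps to the systems it needs at startup.
    landscape = {
        "shop": {"orders", "catalog"},
        "orders": {"database", "message-bus"},
        "catalog": {"database"},
    }
    print(start_order(landscape))

    landscape["database"] = {"shop"}  # a cycle that "evolved over time"
    try:
        start_order(landscape)
    except CycleError as err:
        # graphlib puts the detected cycle into the second element of args.
        print("no valid start order, cycle:", err.args[1])
```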

[2] In my experience, people at the plateau of stability do not think too much about MTTF and the like. Usually, you see them applying the “default measures” like some redundancy and ingress rate limiting. Based on these measures plus the underlying middleware and infrastructure, they expect that this will do the trick for them, i.e., that they do not need to ponder availability beyond those default measures but can safely assume that failures will not happen.

[3] Hopefully, we do not only ponder which failures may hit us but we also measure failures in production and how long it takes to recover from them.
