The long way towards resilience - Part 3

The plateau of stability

The long and winding road towards resilience - Part 3

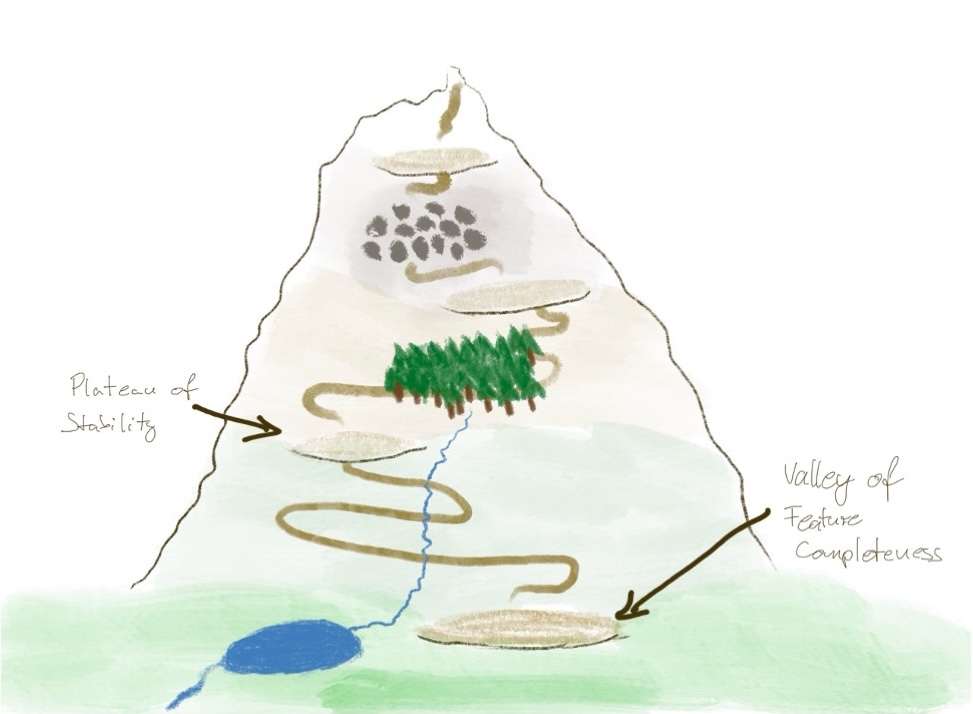

In the previous post, we discussed the valley of feature-completeness, the starting point of our prototypical journey towards resilience, and we realized that such a setup is usually not advisable anymore.

In this post, we will discuss why we still see such a setup quite often in companies even if it is not suitable anymore with respect to the demands of today’s IT system landscapes. Then we will discuss which realization is needed to kick off the journey and what we will find at the first plateau.

Let us get started!

A blast from the past

Why do we still see this “Ops is responsible for availability while the rest of the organization only cares about business features” mindset so often in companies? If we look at the underlying IT system landscapes, it should be obvious that this mindset is not adequate anymore.

To understand this phenomenon, we need to travel back to the past. Until the early 2000s, most IT system landscapes mainly consisted of monolithic applications that worked mostly in isolation. If these applications needed to share information with or trigger an activity in another application, they used offline batch updates. E.g., application A periodically created a transfer file that application B then picked up and processed.

For quite a long time, these batch updates ran at night, once per day or less often. Later, when the pressure rose to share information more often than once per day, the batch updates ran hourly or at similar intervals.

One of the nice properties of batch updates is that they are very robust. For the sending system, it is irrelevant whether the receiving system is running and vice versa. Everything is nicely isolated against failures in other systems. A failure of a surrounding system does not affect the availability of your own system. The only thing you need to take care of is that your system works as specified. For that, it is irrelevant how the surrounding systems behave.
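To illustrate why such a file-based exchange is isolated against failures of the other side, here is a minimal sketch in Python. The function names, the CSV format and the directory layout are assumptions made for illustration only, not a description of any specific system. The sender only needs the transfer directory to exist, and the receiver only needs whatever files happen to be waiting there.

```python
import csv
import os
import tempfile
from pathlib import Path


def export_batch(records, transfer_dir: Path, name: str) -> Path:
    """Application A: write all accrued updates to a transfer file."""
    transfer_dir.mkdir(parents=True, exist_ok=True)
    # Write to a temporary file first and rename it afterwards, so that
    # the receiver never picks up a half-written file.
    fd, tmp_name = tempfile.mkstemp(dir=transfer_dir, suffix=".tmp")
    with os.fdopen(fd, "w", newline="") as handle:
        csv.writer(handle).writerows(records)
    target = transfer_dir / name
    os.replace(tmp_name, target)
    return target


def import_batches(transfer_dir: Path) -> list:
    """Application B: process whatever transfer files are waiting."""
    imported = []
    for path in sorted(transfer_dir.glob("*.csv")):
        with path.open(newline="") as handle:
            imported.extend(csv.reader(handle))
        path.unlink()  # hypothetical convention: processed files are removed
    return imported
```

Neither function calls the other application. If application B is down while `export_batch` runs, the file simply waits until the next import run.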

This leaves two main sources of failures:

- Something your application is running on fails.

- The application itself fails.

The first source of failures is hardware, infrastructure or middleware components failing. These parts of the IT system landscape are the responsibility of Ops. This is where the “Ops is responsible for availability” mindset comes from. Ops is responsible for making sure the underlying operations platform works.

What about the second source of failures? Well, these are what we tend to call “software bugs”. It is the responsibility of Dev (or formerly – and sometimes still – QA) to make sure the application does not contain any critical bugs that manifest as a failure at runtime. 1

Hence, if your IT system landscape mainly consists of monolithic applications that work in isolation and only communicate with other applications via batch updates, the valley of feature-completeness is a valid place to be.

The times they are a-changin'

However, it is 2024 by now and the reality of IT system landscapes has changed significantly. The expectations regarding the currency of data and information have increased. It is not enough anymore to propagate updates between systems once per day or even once per hour. With ever-improving network communication capabilities, the expectation basically transformed into: “Everything. Everywhere. Immediately.”

The communication technology advancements combined with the increasing expectations resulted in a transformation of intersystem communication from offline batch interfaces to online communication. A system with new information (a.k.a. producer) does not periodically send all accrued updates at once to a system interested in that information (a.k.a. consumer). Instead, the consumer typically requests the information online, i.e., via a remote call, from the producer when it needs it.

The consumer also does not request all updates. Instead, it only asks for the specific information it needs at a given point in time to complete its current task at hand (usually a request from a user or another system). As the consumer is able to ask for the required information at any point in time, it also does not store all the (batch) update information from the producer in its own database. Instead, the consumer requests the required information from the producer at the moment it needs it.

Often, the consumer does not even store the requested information in its own database but simply uses it to complete the ongoing processing and then “forgets” the information. If the consumer needs the same information again, it requests it again from the producer. This is often done intentionally because the information could have changed on the producer side between the two requests. 2

As an alternative to this pull-based online request mechanism, push-based online data transfer, e.g., via event-based communication, also evolved. In this setting, the producers immediately send out any updates to the interested parties (e.g., via publish-subscribe) and it is up to the consumers to store this information in order to have it available when needed.
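The difference between the pull-based and the push-based variant can be sketched with a few in-memory classes. This is only an illustration under simplifying assumptions: the class names are hypothetical, the "remote call" is a plain method call, and the publish-subscribe mechanism is a simple callback list instead of a real message broker.

```python
from typing import Callable, Dict, List


class Producer:
    """Owns the data and supports both pull (get) and push (subscribe)."""

    def __init__(self) -> None:
        self._records: Dict[str, str] = {}
        self._subscribers: List[Callable[[str, str], None]] = []

    def get(self, key: str) -> str:
        # Pull: stands in for a remote call made by the consumer.
        return self._records[key]

    def subscribe(self, callback: Callable[[str, str], None]) -> None:
        self._subscribers.append(callback)

    def update(self, key: str, value: str) -> None:
        self._records[key] = value
        # Push: every update is sent out to interested parties immediately.
        for notify in self._subscribers:
            notify(key, value)


class PullConsumer:
    """Requests a single record on demand and does not keep it."""

    def __init__(self, producer: Producer) -> None:
        self._producer = producer

    def handle_request(self, key: str) -> str:
        value = self._producer.get(key)  # depends on the producer being up
        return f"processed {value}"


class PushConsumer:
    """Stores pushed updates locally to have them available when needed."""

    def __init__(self, producer: Producer) -> None:
        self._local: Dict[str, str] = {}
        producer.subscribe(self._on_update)

    def _on_update(self, key: str, value: str) -> None:
        self._local[key] = value

    def handle_request(self, key: str) -> str:
        return f"processed {self._local[key]}"
```

In both variants a single record travels at a time. The pull consumer depends on the producer at the moment of its own request processing, while the push consumer depends on having received and stored the update beforehand.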

These two variants are the most widespread means to share information online. Of course, a lot more variants and nuances of online communication exist. Still, overall we can observe the following general evolution pattern:

- Information transfer went from offline batch to online on-demand

- Information transfer went from multi-record to single-record

The second evolution means that typically not a whole batch of information (usually containing all updates since the last batch was sent out) is sent from producer to consumer but just a single record.

The effect of this movement towards on-demand, online and typically single-record based communication between IT systems is that the IT system landscape gradually transformed from mostly isolated systems to a highly interconnected IT system landscape, continuously communicating via complex interaction patterns. In short:

Today’s system landscapes form a huge, complex, highly interconnected distributed system.

Recent evolutions like service-based architectures or near-realtime analytics only reinforce the effects of distribution.

In a blog series about resilient software design, I discussed the effects of distributed systems and how they cannot be contained solely by Ops, how they will affect Dev and even the business departments. As I laid out the reasoning in those blog posts, I will not repeat it here. If you are not familiar with the reasons why Ops alone cannot ensure the availability of a distributed system landscape, please read (or at least skim) the referenced blog series.

The first insight

However, many companies have not yet adapted to the changed reality of IT system landscapes. We see time and again that social systems lag behind their technological advances. We see it at large scale, e.g., where at least Western societies have not yet adapted to the effects of social networks, especially the extreme echo chamber development. But we also see it at smaller scales, in our context as the lack of adaptation of companies to the changed reality of their IT system landscapes: they treat large distributed system landscapes like a bunch of isolated systems that only communicate via offline batch updates.

If we realize that our IT system landscapes form a complex, highly distributed system and also take into account that the effects of distributed systems cannot be contained solely by Ops, this immediately leads to the first insight needed to kick off the journey towards resilience:

Ops cannot ensure availability alone.

As I wrote in the aforementioned blog series, the effects of distributed systems bubble up to the application level, which in turn means that Ops alone cannot ensure availability anymore. There are additional failure modes that did not exist in a world of isolated, monolithic systems communicating via offline batch updates, and these failure modes can only be handled at the application level. 3

This leads us to our first interim stop, the plateau of stability.

The plateau of stability

The plateau of stability 4 is the first interim stop companies tend to reach on their journey towards resilience.

Many companies I have seen stopped their journey at this plateau. The core idea of this plateau is to avoid failures by all means. The basic mindset is:

If you only push hard enough at the technical level, you can completely avoid failures and thus achieve perfect availability.

Interestingly, most people I have seen only focus on crash failures and overload situations when pondering how to avoid failures. Other possible failure modes are often ignored (we will come back to the other failure modes when discussing the next plateau).

However, it is now the whole IT organization that cares about availability, even if only in terms of failure avoidance. It is not left to Ops alone. Dev also does its share to avoid failures. If we look a bit closer, two different collaboration modes can be observed:

- The first evolution stage of the plateau of stability is usually defined by very little collaboration between Dev and Ops. Ops does its work and provides infrastructure-level tooling. Dev uses the provided tooling to do its part of the work, basically without interacting with Ops at all. The processes and collaboration modes are mostly as they were in the valley of feature-completeness.

- The second evolution stage is defined by a tighter collaboration between Dev and Ops. Without any feedback from production, i.e., from Ops on how well the stability measures worked, Dev is mostly shooting in the dark. This tends to be ineffective. Over time, it also tends to be frustrating for the software engineers in Dev because they never get any feedback on whether their work did any good. Therefore, a closer feedback loop between Ops and Dev is established: Dev implements stability measures and releases them to production. Ops provides feedback on how well the measures worked. Dev then uses the feedback to refine the measures.

- For the sake of completeness: At the valley of feature-completeness, Ops was alone when it came to availability.

So, the plateau of stability actually consists of two sub-plateaus. However, it usually takes companies a while to realize that the lower sub-plateau is of limited effectiveness. But as it requires less change regarding collaboration modes, companies tend to stay there for quite a while before moving on to the higher sub-plateau.

Evaluation of the plateau of stability

With that preliminary remark, let us explore the plateau of stability in more detail using the same evaluation schema we used for the valley of feature-completeness.

Core driver

The core driver is to avoid failure.

The train of thought is: if failures are avoided by all means, availability will be perfect.

Leading questions

Typical leading questions are:

- How can I avoid the failure of my application/service?

- How can I detect a failure and automatically fail over to a replicated instance?

- How can I avoid an overload situation?

- How can I detect an overload situation and fix it by automatically scaling up or throttling the number of requests?

It is all about preventing failures from crashes and overload situations.
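As an illustration of what "throttling the number of requests" can mean in practice, here is a minimal token bucket sketch in Python. It is one common way to implement rate limiting, not necessarily what a given API gateway does internally. The class name, the parameters and the injectable `clock` are assumptions made for this example.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained rate of `rate_per_second`."""

    def __init__(self, rate_per_second: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self._clock = clock  # injectable so the behavior can be tested
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill tokens according to the elapsed time, capped at capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False  # the caller should reject or delay the request
```

A request handler would call `allow()` before doing any work and reject the request (e.g., with an HTTP 429 status) if it returns `False`. This keeps the load on the protected application below a preconfigured limit.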

Typical measures

The typical measures regarding resilience – or (again) to be more precise: maximizing availability – are now split up between Ops and Dev: Ops typically provides the means while Dev uses them to minimize the failure probability. Typical measures are:

- A redundant service deployment, but this time configured by Dev, not Ops, to make sure their own application is still available if an instance crashes.

- Application of fault-tolerance patterns like timeout detection, error checking, retry, circuit breaker and failover to detect and handle crash failures of called applications.

- Application of fault-tolerance patterns like rate limiting or back pressure to avoid overload situations, or use of autoscaling instead when running in a (public) cloud environment.

- …

All those measures are often statically preconfigured, utilizing provided middleware whenever possible. E.g., redundancy is set up using Kubernetes means, the crash failure detection and handling is implemented using service mesh means, rate limiting is configured in the API gateway and autoscaling leverages the public cloud means. I.e., the application code is usually not touched, but the middleware and infrastructure used to run the applications are configured differently to implement the needed measures.
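To make two of the patterns mentioned above a bit more tangible, here is a minimal sketch of what a retry and a circuit breaker do, written as plain Python. Keep in mind that at this plateau such behavior is typically configured in a service mesh or similar middleware rather than coded by hand. All names and default values (`CircuitBreaker`, `call_with_retry`, `failure_threshold`, `reset_after`) are hypothetical and only serve the illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # assumed to be >= 1
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, call rejected")
            # Reset period elapsed: let one trial call through (half-open).
            # A single further failure will open the circuit again.
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0
        return result


def call_with_retry(func, attempts=3, delay=0.0, sleep=time.sleep):
    """Retry a failing call a bounded number of times (attempts >= 1)."""
    last_error = None
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # retrying against an open circuit is pointless
        except Exception as error:
            last_error = error
            if attempt < attempts - 1:
                sleep(delay)
    raise last_error
```

Both helpers only deal with crash-like failures of the called application: the call either raises an error or it succeeds. This matches the narrow focus on crash failures described above.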

Also note that the focus is on technical measures only. At the plateau of stability, ensuring availability is the sole responsibility of IT. There is no discussion with a business owner or the like. This will become relevant when we examine the next plateau. For the moment, please just keep it in mind.

Trade-offs

The plateau of stability approach has several trade-offs:

- Coming from the valley of feature-completeness, this plateau is relatively easy to reach – especially the lower sub-plateau that does not yet incorporate any feedback loops between Dev and Ops.

- However, it delivers the best results regarding availability at the higher sub-plateau, i.e., with a feedback loop between Ops and Dev.

- For Dev, measures are relatively easy to implement because the implementation often only requires some configuration of the middleware and infrastructure provided by Ops.

- The approach works well with an economies of scale business model, i.e., in companies that focus on cost-efficiency and not on market feedback cycle times (which is still the business model of many companies) because it can be implemented without too much effort and does not respond to external forces (here: potential failure sources) quickly.

- It is possible to achieve quite good availability. As a rule of thumb, up to 3 nines (99.9%) availability for a single runtime component is possible with that approach.

- However, if a runtime component does fail, detection and recovery often take quite long because the underlying assumption is that it is possible to avoid failures completely and thus handling of failures is an afterthought.

- Resilience is still a non-issue. The underlying assumption is that everything can be kept under control with enough effort.

When to use

Such a setup is okay if your availability needs are not too high: as a rule of thumb, less than 99.9% availability for a single runtime unit 5. This makes the approach suitable for mostly monolithic applications that have very little online interaction with other applications and are allowed to have scheduled downtimes for updates and maintenance.
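To get a feeling for what such an availability number means, it helps to translate it into a downtime budget. The following small helper is only a back-of-the-envelope sketch. It assumes a year of 365 days and treats availability as the fraction of time the system responds as specified. The function name is made up for this example.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def allowed_downtime_hours(availability: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime per period that still meets the availability target."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_hours
```

With these assumptions, an availability of 99.9% leaves a budget of roughly 8.76 hours of downtime per year, and 99% leaves roughly 87.6 hours.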

When to avoid

As a rule of thumb, such a setup should be avoided if the system availability needs to be higher than 99.9%.

It should also be avoided if the system is distributed internally, e.g., using a microservice approach or if a lot of other systems need to be called to complete an external request. This is because the overall availability is the product of the availabilities of all runtime components needed to complete the external request which results in a lower overall availability of the system. 6
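The multiplication effect can be checked with a few lines of Python. The sketch assumes that all components are needed for every request and that their failures are independent of each other. Both function names are hypothetical.

```python
import math


def overall_availability(availabilities) -> float:
    """Availability of a request that needs all given components."""
    return math.prod(availabilities)


def required_component_availability(target: float, components: int) -> float:
    """Availability each of n equal components needs to reach the target."""
    return target ** (1.0 / components)
```

For 10 components with 99.9% availability each, `overall_availability([0.999] * 10)` yields approximately 0.990, i.e., about 99%. Read the other way round, `required_component_availability(0.999, 10)` yields approximately 0.9999: to reach 99.9% overall, each of the 10 components would need about 4 nines.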

The only exception in a service-based setup is if the services do not call each other while processing an external request (the so-called microlith approach) because in this setting the availabilities of the services (microliths) are unrelated, i.e., do not multiply up.

Such a setup is completely insufficient for safety-critical contexts.

Impact radius

The impact radius is limited to the IT systems and the IT department.

Blind spot

The blind spot of this setup is related to the notion of avoiding failures completely. I tend to call this blind spot the “100% availability trap” which I will discuss in the next post in conjunction with the insight needed to move on to the next plateau.

Summing up

The plateau of stability is the first interim stop on the journey towards resilience. It accepts that Ops cannot ensure availability alone anymore for today’s distributed system landscapes. Therefore, it extends the responsibility for avoiding failures to Dev.

The plateau is relatively easy to reach, which is one reason why we quite often see companies at this plateau. It allows for relatively good availability for single runtime components. However, it is not suitable for higher availability demands, especially not if the system is distributed internally or many systems need to interact online to process requests. It is not suitable at all for safety-critical systems.

In the next post, we will discuss the 100% availability trap in more detail and revisit availability. We will also discuss the realizations needed to continue the journey to the next plateau. Stay tuned … ;)

1. This sometimes includes some hardening of the offline batch interfaces so that they do not fail if unexpected data arrives, i.e., data that syntactically or semantically does not fit the expectations of the receiving system. However, batch import jobs often are programs on their own, running independently from the main application. Therefore, it does not matter too much if these programs crash due to unexpected data. If this happens, usually the batch input is checked manually and the corrupted entries are fixed or removed. Afterwards, the import job is restarted. If this happens too often, usually the imports are stopped until the team maintaining the sending system has fixed the problem. Therefore, hardening the interfaces to not crash if unexpected data is sent is not a big issue with batch-oriented interfaces. This is very different from online interfaces where it is vital for the robustness of the system that the interfaces are able to detect and handle unexpected data without failing because the interfaces are part of the main application.

2. I deliberately left out optimizations like caching that were introduced to mitigate some of the challenges of the unoptimized, pure concept that always requests all required information on demand from the respective providers.

3. Actually, there are also failure modes that go beyond the application level, but we will come to them later in this blog series.

4. Note that I use the term “stability” primarily to distinguish this approach from the robustness approach. Depending on your reasoning, you could use both terms interchangeably. However, I associate a certain degree of rigidness with “stability” while I associate “robustness” with a certain degree of flexibility. E.g., a concrete pillar is stable in that sense while a tree is robust. The concrete pillar will withstand a certain level of stress without deformation and then break while the tree will rather yield under stress and return to its original shape after the stress ends (of course, also a tree will eventually break if the stress level becomes too high but usually it can absorb and recover from stress much better than a concrete pillar of the same size).

5. Keep in mind that availabilities multiply up, i.e., become smaller if more than a single runtime unit is required to process an external request (see also next footnote for a more detailed explanation).

6. Availability is defined as the fraction of the overall time a system responds according to its specification. This is a number between 0 and 1 (both included), or 0% and 100% if you prefer this notation. Multiplying two positive numbers that are smaller than 1 results in a number that is smaller than both numbers. If you need several runtime components, e.g., services, to process an external request because the receiving service needs to call other services to complete its request processing, all services must be available for the request to complete successfully, i.e., the individual service availabilities multiply up. E.g., if 10 services, each with an availability of 99.9%, are required to process an external request, the overall availability is 0.999^10, which is approx. 0.99, i.e., 99%.
