The long way towards resilience - Part 2

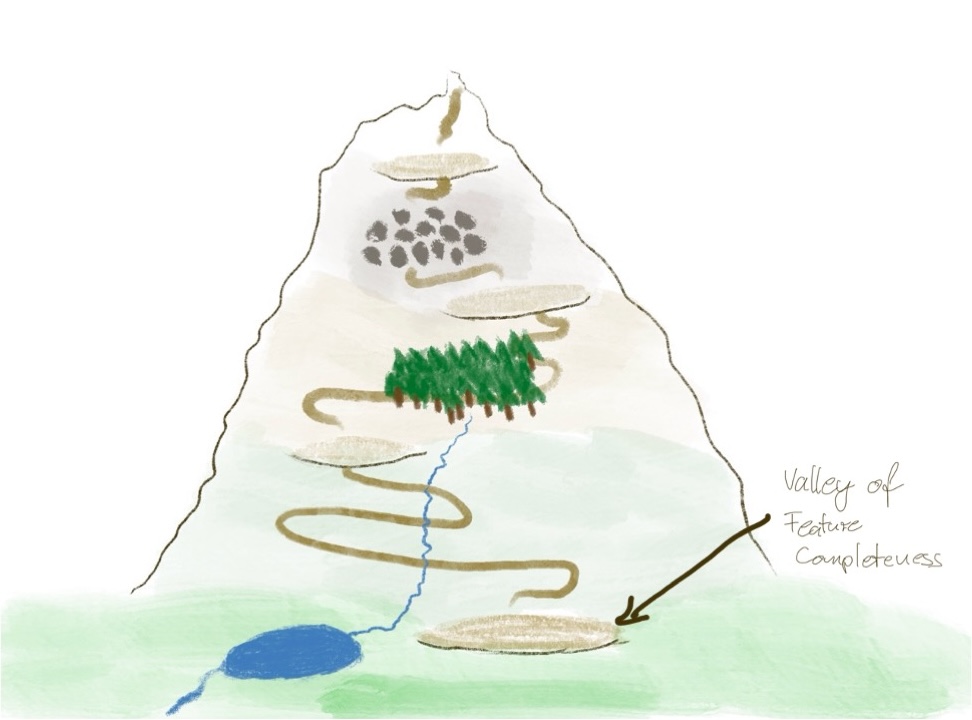

The valley of feature-completeness

The long and winding road towards resilience - Part 2

We laid the foundations for our journey towards resilience in the previous post by clarifying what resilience is. We needed to do this to create a common goal for our journey.

In this post, we will discuss the starting point of our journey and why it is not sensible anymore to linger there.

Note that I will describe a prototypical journey of a company from zero to resilience. The starting point and the interim stops are states I have seen several times myself. Nevertheless, this is a simplified model, which means that the journey of your particular company might be different.

Maybe you cannot associate the state of your company with a single place I will describe. Maybe you find a mix of properties in your company, some from place A and some from place B. This is all perfectly fine. Reality is always messier than a good model.

The journey I will discuss is meant to help you reason about the state of your company and ponder useful next steps. It provides the realizations needed to travel to the next interim stop of the journey. It discusses typical measures required to get to that stop. It looks at trade-offs and blind spots. All these things are reasoning tools. Nothing more. But also nothing less.

The mountain-climb metaphor

I will use a mountain-climb metaphor for describing the journey towards resilience. We will start in a seemingly lovely valley and stepwise climb to the peak, resting at several plateaus along the way.

I use this metaphor to make clear that the journey towards resilience is not a nice and easy ride on a paved road, window rolled down, arm hanging out, wind in your hair and singing along with a nice tune from the radio.

This is what many people would love to have, higher-level decision makers as well as software engineers. They would love to have a simple 5-bullet-point list they can easily check off and then – voilà – they are resilient. Mission accomplished! Yay!

Unfortunately, it is not that easy. Becoming resilient is hard work, especially the later steps. Therefore, I use the mountain-climb metaphor to make clear:

- It will not be easy.

- Parts of the journey will be exhausting.

- There will be obstacles along the way that we need to overcome.

However, the view from the peak is breathtaking … ;)

Change as an orthogonal topic

Many of the obstacles we need to overcome have to do with implementing change – technical change, communication change, process change and organizational change. As I have discussed in prior posts, change is always hard and messy. Additionally, as I pointed out in my need-vs-want dilemma post, it is not enough to convince people (which is hard enough already). It is also necessary to change the higher-level system, the organization and its collaboration modes.

Quite a lot of people then ask me for the silver bullet to implement change. Of course, they do not directly ask for a silver bullet, but basically this is what they would like to get from me. The truth is: It does not exist! Sorry about crushing your silent hopes.

There is a reason why hundreds and thousands of books about implementing change exist – and the reason is that change is hard. If a silver bullet existed and I knew it, I would have written a book about it and would live comfortably off its royalties.

Even well-known experts in the area of change such as John Kotter (who – unlike me – live quite well off their book royalties) have not yet discovered a silver bullet. While they offer a lot of good and actionable advice, implementing change still remains hard and messy.

Overall, change management is an orthogonal topic, always required if you need to change something and thus not specific to resilience. Not being a change expert myself, I will stick to the reasoning tools and arguments you need on the rational side. If you are looking for advice on how best to implement change in your organization, I would refer you to the change books that are available in abundance. 1

Besides implementing change, there are some more obstacles we need to overcome along the way. The good part of the story is that we do not need to climb Mt. Everest. We do not need to climb up to hostile heights and barely make it to the top alive. We want to climb Mt. Resilience. It is still challenging. But it is doable.

The valley of feature-completeness

Alright, a mountain climb. We are prepared and ready to start our journey. But where do we start from and why should we leave the place we are currently at in the first place? So, let us take a look around.

I tend to call the starting place the valley of feature-completeness. The IT organizations of many companies I have seen are still there. The core property of such an organization is that

- the IT development department (Dev) is responsible for feature delivery while

- the IT operations department (Ops) is responsible for the availability of the running IT systems.

This also means that Dev and Ops are separate departments, incentivised based on different targets. Dev is incentivised based on the number of features it implements while Ops is incentivised based on production stability, i.e., the uptime of the IT systems it runs.

Availability and reliability

If we look at resilience, we need to look at the uptime of the systems. One of the core goals of resilience is to maximize the respective system’s availability, i.e., the ability to act and respond to external stimuli according to the system’s specification (or at least conforming to sensible expectations if a formal specification does not exist). It does not matter if we talk about a technical or a non-technical system, btw.

For the sake of completeness: In the context of safety-critical systems, we often look at reliability rather than availability. Reliability describes the probability that a system acts and responds correctly throughout a specified period of time. Availability, in contrast, looks at the percentage of the overall time a system responds according to its specification.

E.g., for a heart-lung machine, it is essential that it works correctly during an operation. It does not matter if it is sent to maintenance for 3 weeks afterwards. It only matters that it works correctly during the operation because a malfunction of the machine during an operation would put the patient’s life at risk.

Compared with this, occasional non-availabilities in, e.g., an e-commerce system are not critical as long as they happen relatively rarely and do not take too long. Therefore, in non-safety-critical contexts – which most enterprise-software-related contexts are – we tend to look at availability instead of reliability. We are more interested in the overall percentage of the time the system does its job properly than in the probability that a system does not fail within a specified time frame.

As most of us tend to work in non-safety-critical environments, I will stick to availability throughout the discussion of the journey towards resilience. However, keep in mind that in safety-critical environments, we are usually more interested in reliability. 2
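To make the distinction concrete, here is a minimal Python sketch. It assumes that availability is measured as observed uptime divided by total observed time, and it models reliability with a constant failure rate (an exponential model), which is a common simplification and not something the discussion above depends on. The function names and all numbers (the 720-hour month, the 6-hour operation, the 50,000-hour mean time between failures) are hypothetical and chosen only for illustration.

```python
import math


def availability(uptime_hours: float, downtime_hours: float) -> float:
    """Fraction of the observed time in which the system did its job."""
    total = uptime_hours + downtime_hours
    if uptime_hours < 0 or downtime_hours < 0 or total == 0:
        raise ValueError("durations must be non-negative and not both zero")
    return uptime_hours / total


def reliability(mission_hours: float, mtbf_hours: float) -> float:
    """Probability of no failure during the mission.

    Assumption: constant failure rate (exponential failure model),
    described by a mean time between failures (MTBF).
    """
    if mission_hours < 0 or mtbf_hours <= 0:
        raise ValueError("mission must be non-negative, MTBF must be positive")
    return math.exp(-mission_hours / mtbf_hours)


# Hypothetical e-commerce system: 1 hour of downtime in a 720-hour month.
shop_availability = availability(uptime_hours=719, downtime_hours=1)

# Hypothetical heart-lung machine: a 6-hour operation followed by
# 3 weeks (504 hours) of maintenance.
machine_availability = availability(uptime_hours=6, downtime_hours=504)
machine_reliability = reliability(mission_hours=6, mtbf_hours=50_000)

print(f"shop availability:    {shop_availability:.4f}")
print(f"machine availability: {machine_availability:.4f}")
print(f"machine reliability:  {machine_reliability:.5f}")
```

With these made-up numbers, the machine is available for only a little over 1% of the overall time, yet the probability that it gets through the operation without a failure is above 99.98%. The shop, in contrast, is judged by its availability of roughly 99.86%.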

Availability as S.E.P.

Coming back to the valley of feature-completeness, we can observe that the whole Dev budget is reserved for implementing business features. Availability is “outsourced” to Ops. From a Dev perspective, availability is a non-issue, an S.E.P. (somebody else’s problem).

Evaluation schema

Before assessing whether such a separation of concerns is a viable concept regarding resilience, let us first do a structured evaluation of the valley of feature-completeness with a special focus on the parties beyond Ops (as Ops will always have a genuine interest in resilience – or at least high availability). We will use the same evaluation schema for all stops of our journey, looking at the following aspects:

- Core driver – what is the primary goal regarding resilience?

- Leading questions – what are the questions driving the measures?

- Typical measures – examples of measures used to implement the respective level of resilience

- Trade-offs – the pros and the cons of the respective stop

- When to use – when is it fine to use such an approach regarding resilience

- When to avoid – when we must not stop our journey at the given stop

- Impact radius – who and what is affected by the required resilience-related measures

- Blind spot – what the core driver misses and what, once recognized, triggers the journey to the next stop

Evaluation of the valley of feature-completeness

Using this evaluation schema, we can evaluate the valley of feature-completeness in a structured way:

Core driver

The core driver is to maximize business feature throughput.

The whole organization considers IT solely as a means to implement the IT aspects of their business domain ideas, i.e., IT is typically considered a cost center. Consequently, IT is all about implementing as many features as possible at the lowest cost possible.

Ops is expected to guarantee high runtime availability of the IT systems but nobody cares how they do it. Availability is considered an S.E.P. – or more precisely: an Ops problem.

Leading questions

Typical leading questions are:

- Are the business requirements implemented correctly?

- How can we implement features faster?

It is all about minimizing cost per feature while preserving functional correctness. Feature costs are minimized within the boundaries of the encompassing project, which is the typical organizational form of software development. These local optimizations

- neither take the effects of the cost optimization on other parts of the system landscape that are not part of the project into account

- nor take the effects on future maintenance and evolution of the changed system parts beyond the project end date into account

- nor take any runtime-related properties like availability, operability, observability, security, scalability, performance, recoverability, resource usage, and the like into account unless they are directly related to the respective business feature.

Typical measures

The measures regarding resilience – or to be more precise: maximizing availability – all take place in Ops land as the rest of the organization ignores this topic and simply expects Ops to take care of it. Typical measures are:

- Providing redundant hardware and infrastructure components, including HA (high-availability) hardware

- Running the software systems redundantly behind a load balancer with failover or inside a cluster solution with failover

- Strict handover rules for dev artifacts as Ops (legitimately) does not trust what Dev “throws over the wall”

- Long pre-production testing phases to check for potential production problems – again because Ops (legitimately) does not trust what Dev “throws over the wall”

- …

Overall: Everything Ops can influence.
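As a rough illustration of why Ops reaches for redundancy, the following sketch estimates the availability of several redundant instances behind a load balancer. It rests on strong assumptions that are mine, not part of the setup described above: instances fail independently of each other, failover is instantaneous and never fails itself, and a single healthy instance is enough to serve all requests. Real systems rarely satisfy all of these, so treat the result as an optimistic estimate. The function names and numbers are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365


def redundant_availability(single: float, instances: int) -> float:
    """Availability of `instances` redundant copies of a system.

    Assumption: independent failures and perfect failover, so the whole
    setup is down only if every instance is down at the same time.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single-instance availability must be in [0, 1]")
    if instances < 1:
        raise ValueError("need at least one instance")
    return 1.0 - (1.0 - single) ** instances


def expected_downtime_hours_per_year(avail: float) -> float:
    """Expected downtime per (non-leap) year for a given availability."""
    return (1.0 - avail) * HOURS_PER_YEAR


# Hypothetical single-instance availability of 99%.
for n in (1, 2, 3):
    a = redundant_availability(0.99, n)
    print(f"{n} instance(s): availability {a:.6f}, "
          f"about {expected_downtime_hours_per_year(a):.2f} hours of downtime per year")
```

Under these idealized assumptions, a second instance takes a 99% available system to roughly 99.99%, i.e., from about 88 hours to less than one hour of expected downtime per year.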

Trade-offs

The basic trade-offs of such a setting are:

- It is comparatively nice for Dev because they have less to take care of. Especially, they can ignore all relevant runtime properties of the software and solely focus on implementing business logic (which can be stressful enough depending on the given environment).

- It is not so nice for Ops because they are expected to reliably run software that was created without availability or any other relevant runtime property in mind.

- Resilience is considered a non-issue. The underlying assumption is that everything is always under control.

When to use

Such a setup is okay if you are in a non-safety-critical context and your IT system landscape consists mainly of monolithic, isolated systems that communicate via batch interfaces with each other. I will discuss this evaluation in more detail in the next post in conjunction with the discussion of the blind spot of this setup.

When to avoid

Such a setup should be avoided if your IT system landscape consists of many highly interconnected systems that communicate with each other via online interfaces – which is the default for today’s IT system landscapes.

It is insufficient for settings that use internally distributed systems (keywords: service-based architectures, microservices) and completely insufficient for safety-critical contexts.

I will also discuss this evaluation in more detail in the next post in conjunction with the discussion of the blind spot of this setup.

Impact radius

The impact radius of the resilience-related (or more accurately: availability-related) measures is limited to the Ops department and the part of the IT system landscape Ops can influence.

Blind spot

In short, the blind spot of this setup is everything besides business features from a non-Ops perspective – which raises the question whether “blind spot” is the appropriate term here or whether we should rather call it a “blind area with a little non-blind spot”. This setup especially ignores the reality of today’s system landscapes. It also ignores resilience and treats availability as an S.E.P. (or more accurately: an Ops problem).

Summing up

When looking at the evaluation of the valley of feature-completeness, we realize that this setup is unsuitable for nearly all IT system landscapes of today. It completely ignores resilience and makes availability solely an Ops problem while the rest of the organization completely ignores it. Even worse, the actions of the rest of the organization are often counterproductive regarding availability.

The question is why we still see such a setup quite often even if it is not suitable anymore with respect to the demands of today’s IT system landscapes.

We will discuss this question in more detail in the next post. We will also discuss how the realization that this approach is not sufficient anymore leads us to the first plateau of our journey. And we will discuss what we find at the first plateau. Stay tuned … ;)

1. Personally, besides the change books from the aforementioned John Kotter, I can recommend the books “Fearless Change: Patterns for Introducing New Ideas” and “More Fearless Change: Strategies for Making Your Ideas Happen” by Mary Lynn Manns and Linda Rising. They nicely organize useful change practices along a change process using a pattern approach.

2. If a safety-critical system must work 24x7, such as a power plant, we are interested in both reliability and availability.
