The reusability fallacy - Part 4

Why the reusability promise does not work


In the previous part of this series, I discussed why reusability is a false friend in distributed systems and thus should not be used to sell distributed architectural approaches. Additionally, I discussed the difference between “usable” and “reusable” assets and why you should strive for “usability” in distributed approaches such as microservices.

In this final part of this little series, I will briefly summarize what we have discussed so far and then give a few practical recommendations on when and where to strive for reusability, when to avoid it and what to do instead.

A little spoiler upfront: Personally, I think reusability has a huge value! But if you use it for the wrong reasons in the wrong places, you end up in a very bad place where a lot of innocent people suffer for a long time because you made the wrong decision.

The first three parts of this series were about debunking that flawed reasoning, hoping it will help you avoid this type of wrong decision in the future. This final post looks at reusability from a more general perspective, including the places where we definitely want to foster reuse.

What we have learned so far

In the first part of the series, I tried to deconstruct the fallacy that we can save lots of money from a more efficient production process by using standardized, reusable parts. We have seen that software development is all about design. The design is only completed after the last line of code is written.

The actual production process, i.e., creating the executable program, is so efficient and cheap that there is nothing left to save. Thus, all the reusability approaches that take concepts from the physical world and try to apply them to software development do not work. Regarding reusability, we are left with efficiency gains we can achieve by using reusable parts in the design process.

Software development is all about design.

Reusability approaches that are built on the idea of optimizing the production process do not work.

In the second post, we looked at the costs of making software assets reusable and learned that making an asset reusable takes multiple times the effort of creating it for a single purpose. We have also seen that shirking the costs is not an option.

Creating a reusable asset means multiple times the effort of creating an asset for a single purpose.

You need to take the extra costs into account when creating a business case based on reusability.

Additionally, we have looked at properties of a software asset that either promote or inhibit reusability. Based on that we have seen that most of the architectural paradigms that are sold with the promise to amortize via reusability aim at the places where reusability does not pay. Therefore, those business cases do not work.

Introducing a new architectural paradigm with reusability as business case does not pay.

On the other hand, all solutions that exhibit good reusability suitability properties already exist as reusable assets, e.g., as part of programming language ecosystems or OSS solutions.

Everything worth being implemented as a reusable solution already exists as a reusable asset.

Finally, we have seen that we do not need any new architectural paradigms to implement reusability because all we need for that is modularization, which has been available for more than 60 years.

In the third post, we looked at the price of reusability in distributed systems because most paradigms that are sold with the promise to amortize via reusability are distributed (e.g., DCE, CORBA, EJB, DCOM, SOA or microservices).

We have seen that reusability in distributed systems is a false friend. Reusability leads to very tight coupling, which, in conjunction with the imponderables of remote communication, leads to brittle and slow solutions. Instead, reusability should be implemented inside process boundaries where needed (e.g., using libraries), because tight coupling inside a process boundary does not affect availability and response behavior at runtime. [1]

Reusability in distributed system design is a false friend. Avoid it.

Try to address reusability inside process boundaries instead.

We also discussed the distinction between “usability” (based on functional independence) and “reusability” (based on composition), which are often mixed in arbitrary ways. Mixing them up in distributed system design is a problem because they lead to very different runtime properties, and those of “reusable” designs are rather undesirable.

Aim for usability (i.e., functional independence) across process boundaries.

Effects of selling reusability for the wrong reasons

As I wrote in the beginning, everything in the first three parts of this series was about debunking flawed reasoning that is often used to push through some new architectural paradigm, typically based on the promise to amortize via reusability.

If such reasoning succeeds, i.e., if a new paradigm is implemented, it typically requires substantial upfront investments. The sponsors who pay for these investments naturally want to see the second part of the business case happen, i.e., the promised return on their investments due to massive reuse.

As a result, the advocates of the new paradigm are under pressure to deliver value based on reuse because they promised to do so. Hence, they will push reuse hard, whether it makes sense or not. They promised it. They have to deliver.

This usually leads to a very bad situation for all the people who are affected by the solution:

- The developers suffer from all the downsides of a (typically distributed) monolith that this approach will result in.

- The operations engineers suffer from a solution that exposes bad availability and response time properties at runtime.

- The QA engineers suffer from tight dependencies between the parts of a solution, which make it hard to test them independently.

- The users suffer from a solution that exposes bad availability and slow response times.

- The business department suffers from slow feature and change implementation due to the effects of the tight coupling.

- The original sponsors suffer from a non-functioning business case.

- And so on …

A lot of people suffer just because someone tried to sell a new architectural paradigm using the wrong reasoning. As suffering is always a bad thing, we should try hard to avoid this.

When not to go for reusability

With all that in mind, I will now give a few recommendations on when to aim for reusability, when not to do it and what to do instead. Let us first look at when not to go for reusability.

Advice #1: Do not use reusability to justify the introduction of a new architectural paradigm.

We had it before: do not use reusability to justify the introduction of a new architectural paradigm. It neither works nor makes sense. We do not need any new paradigm because modularization, which we have had for more than 60 years, gives us all we need to implement reuse. Additionally, using reuse as part of the justification (and the underlying business case) usually results in bad system designs, especially if the system is distributed.

An illustrative (negative) example is the concept of layered SOA that arose from reusability thinking [2]. The reasoning went like this: “We need those entities all over the place. Thus, let us create reusable services for them. The same is true for processes. We also have subprocesses that we use in different places. Thus, let us create the subprocesses reusing the data services and then processes reusing the subprocesses. Finally, we create user interaction services which reuse the process services as they need them. This way, we create high business agility on the user interaction layer by simply recomposing all those reusable services as we need them.”

We know that recomposition of standardized business functionality never works this way, even though some people do not cease to dream of it. This dream is many years old and has never worked as imagined. There are a lot of reasons for that which are outside the scope of this post.

But even if we ignore the fact that the desired recomposition does not work, we immediately face a much more obvious problem: for an interface service to work, a lot of underlying services need to be available, i.e., respond timely and correctly. If we assume an availability of 99.5% per service involved (including planned downtimes, e.g., for deployments), which is already a non-trivial availability, we are down to approx. 95% availability for the interface service if it depends on 10 services to fulfill its tasks (0.995^10 ≈ 0.951).

10 services is not a high number in such a design when considering the breakdown structure, i.e., process services, subprocess services and data services. Still, 1 out of 20 requests will fail on average. If the interface service required 50 services (because someone took reusability really seriously), we would be down to approx. 78% availability (0.995^50 ≈ 0.778), i.e., more than 1 out of 5 requests would fail on average.
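The availability arithmetic can be reproduced with a few lines of Python. This is a minimal sketch that makes explicit what the calculation assumes implicitly: service failures are independent, and every request needs all of its dependencies to answer (no retries, caches or fallbacks). The function name `chain_availability` is made up for illustration. Under these assumptions, 10 dependencies yield about 95.1% and 50 dependencies about 77.8%.

```python
def chain_availability(per_service: float, dependencies: int) -> float:
    """Probability that all `dependencies` services are available at once.

    Assumes independent failures and that every dependency is required for
    every request (a simplification, not a model of any real system).
    """
    if not 0.0 <= per_service <= 1.0:
        raise ValueError("per_service must be a probability in [0, 1]")
    # Independent events that must all succeed: multiply their probabilities.
    return per_service ** dependencies


if __name__ == "__main__":
    for n in (1, 10, 50):
        a = chain_availability(0.995, n)
        # The expected share of failing requests is simply 1 - availability.
        print(f"{n:>3} services: {a:.1%} available, "
              f"about 1 in {1 / (1 - a):.0f} requests fails")
```

Real systems can do better (caching, retries, graceful degradation) or worse (correlated failures, timeouts that cascade), so the numbers are a rough lower-bound intuition rather than a prediction.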

That is exactly what those layered SOA initiatives experienced. As a countermeasure, they introduced Enterprise Service Buses and Process Engines, just to mitigate the issues they had created through a design based on reusability. Those central control instances then created new types of problems, like business logic creeping into the control instances, which made them and the supporting teams a bottleneck, slowed down progress and buried the dream of better business agility for good. [3]

Advice #2: Be wary of reusability if the problem you try to solve does not exhibit good reusability suitability properties.

Additionally, we should be wary of reusability if the problem we try to solve does not exhibit good reusability suitability properties. We discussed this in the second post. Therefore, I will not repeat it here.

What to do instead

If we encounter a situation where reusability is not a good idea, what should we rather do?

Advice #3: On a business case level, better argue with benefits like “increased business agility” or “better technology obsolescence management” if you want to introduce a distributed modularization paradigm like microservices.

If you want to introduce a new architectural paradigm, rather build the business case on “increased business agility”, i.e., that you will be able to move faster and that different parts of the business can move independently. In dynamic markets with post-industrial properties, this will give you an important competitive advantage that you can use for a business case reasoning.

You can also argue with better options to counter technology obsolescence if you would like to introduce a distributed modularization paradigm such as microservices. This is more of a risk management argument: by splitting the application landscape into smaller chunks that are mostly independent (if designed right) and collaborate via defined interfaces only, you can phase out older technologies and test new technologies at a much lower risk, which in turn also means a cost reduction once you quantify the risks.

Corollary to advice #3: Do not try to introduce a distributed modularization paradigm like microservices if cost reduction is your sole driver.

Yet, if you are only interested in cost reduction, you had better avoid introducing any new distributed modularization paradigm. All those paradigms, no matter if microservices or anything else, require additional care in development and production so as not to backfire heavily. If the people involved are not used to it, they need training and time to master the challenges of the new paradigm. If you only look for the penny you can save today, you will not be willing to make those investments. As a consequence, the new paradigm will most likely create more harm than value.

Advice #4: On a design level, prefer “usability” (functional independence) over “reusability” (composition) in distributed systems.

On a design level, we already discussed the concept of “usability” in the third part of this series. If you go for a distributed modularization approach like microservices and you try to organize your business functionality using those types of modules, usability gives you a good way to harvest the benefits of the paradigm without suffering from the downsides of tight coupling in distributed systems due to compositional reusability.

As I implied in the third part already, creating a good design for “usability” is not trivial and beyond the scope of this post. I will pick up that topic in future posts. [4]

Mind the context – avoid the dogma

Always keep in mind that the design-related pieces of advice in particular are heuristics, i.e., rules of thumb that should help you make better decisions. They are not carved in stone! Thus, please do not treat them dogmatically.

Corollary to advice #2 and #4: Design advice is heuristic, not dogma. It is no replacement for thinking.

You will encounter situations where you might need compositional reusability across service boundaries, and that can be okay. If you know what you are doing and are aware of the consequences of your decision, chances are that you will deal with them in a sensible way.

For example, an engineer in one of my workshops once told me that they had a service-based architecture. They also did a lot of machine learning and wanted to speed that up via a GPU grid. They decided to put the grid behind a service facade that could be reused from arbitrary services to make the grid easily accessible for different user groups. So, the goal of this reusable asset was to provide and control access to a specific type of resource, which is a perfectly valid use case.

I do not know exactly how they implemented it. But with the knowledge from this blog series, we can see two options:

- We can reuse the GPU grid service in a traditional, compositional way, i.e., the calling service is tightly coupled to the GPU grid service. If the calling service cannot do anything useful while waiting for the GPU grid service to return, it can be a valid decision to accept the availability impact that results from the tight coupling. We still should try to avoid making human users wait as well.

- We can use the GPU grid service in a collaborative, functionally independent way. We split the whole use case into three services. The first service accepts the inputs and hands them over to the GPU grid service, ideally asynchronously, e.g., via a queue [5]. The GPU grid service then does the processing as soon as it has the capacity for it and passes the results on to another service, again ideally asynchronously. The third service then picks up the processing results and consumes them.
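The two options can be contrasted in a small, self-contained Python sketch. It is not how the team in the workshop implemented it (that is unknown); threads and `queue.Queue` merely stand in for separate services and a logical FIFO queue, and all names (`gpu_grid_process`, `intake_service`, `gpu_grid_service`, `consumer_service`) are hypothetical. In option 1 the caller blocks on the grid; in option 2 the three parts only share two queues and never call each other directly.

```python
import queue
import threading
from typing import List, Optional


# Hypothetical stand-in for the GPU grid's actual work (here: squaring numbers).
def gpu_grid_process(job: int) -> int:
    return job * job


# Option 1: compositional reuse. The caller blocks until the grid returns,
# so the grid's availability and latency become part of the caller's.
def caller_option_1(job: int) -> int:
    return gpu_grid_process(job)


# Option 2: functional independence. Three "services" communicate only via
# two FIFO queues; none of them calls another one directly.
STOP = None  # sentinel that tells the next stage to shut down


def intake_service(inputs: List[int], jobs: "queue.Queue[Optional[int]]") -> None:
    for item in inputs:
        jobs.put(item)  # hand the input over and move on; no waiting for results
    jobs.put(STOP)


def gpu_grid_service(jobs: "queue.Queue[Optional[int]]",
                     results: "queue.Queue[Optional[int]]") -> None:
    # Takes jobs whenever it has capacity; a slow grid only lets the queue grow.
    while (job := jobs.get()) is not STOP:
        results.put(gpu_grid_process(job))
    results.put(STOP)


def consumer_service(results: "queue.Queue[Optional[int]]", sink: List[int]) -> None:
    # Picks up processing results whenever they arrive.
    while (result := results.get()) is not STOP:
        sink.append(result)


def run_option_2(inputs: List[int]) -> List[int]:
    jobs: "queue.Queue[Optional[int]]" = queue.Queue()
    results: "queue.Queue[Optional[int]]" = queue.Queue()
    sink: List[int] = []
    stages = [
        threading.Thread(target=intake_service, args=(inputs, jobs)),
        threading.Thread(target=gpu_grid_service, args=(jobs, results)),
        threading.Thread(target=consumer_service, args=(results, sink)),
    ]
    for stage in stages:
        stage.start()
    for stage in stages:
        stage.join()
    return sink
```

Both variants compute the same results; the difference lies in who waits for whom. In option 2, the intake returns as soon as its inputs are buffered, so a temporarily slow or unavailable grid delays results instead of failing the intake.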

Both designs are valid. The first is based on traditional reusability, the second is based on usability in terms of functional independence. The different designs will lead to different services with different interfaces and different communication patterns. While the general rule of thumb points toward the second option, there can be contexts where the first option makes more sense.

The key point is to make the decision knowingly and to deal with the consequences in a sensible way.

Finally, what is left for reusability

From all that I have written in this series, you might have got the impression that I see little value in reusability. So much text to convince people not to go for reusability. To be honest, quite the opposite is true. Personally, I think that reusability has a huge value that cannot be overestimated – if you use it right.

To illustrate the value of reusability, assume you need to build a not too challenging solution, e.g., a small e-commerce web shop that needs to run in a desktop browser and on mobile devices. If we leave aside for a moment that we can create such solutions today with tools like Shopify in a day or two, this would be a task for a small team (5-7 people) for 3-6 months.

But if you had to start at the level of a bare programming language (3GL, Turing-complete, no standard libraries, no ecosystem), this would be a daunting task: probably 100 or more people working for years, not knowing if they would succeed at all.

This enormous difference in productivity is the merit of reusability. If you needed string processing in C++ in the early 1990s, you had to implement it yourself. Today, you can start a whole HTTP server and more with a single line of code. This is reusability!
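As one concrete illustration (Python is just an example here; many ecosystems offer the same), the standard library's `http.server` module turns the “single line” claim into practice. The helper name `start_file_server` is hypothetical; the reuse happens in the one line that constructs `HTTPServer` with `SimpleHTTPRequestHandler`.

```python
import threading
from http.server import HTTPServer, SimpleHTTPRequestHandler


def start_file_server(port: int = 0) -> HTTPServer:
    """Serve the current directory over HTTP from a background thread.

    Port 0 lets the operating system pick a free port; the chosen port is
    available afterwards as server.server_address[1].
    """
    # The reuse happens in this one line: HTTP parsing, request dispatch and
    # static file serving all come from the standard library.
    server = HTTPServer(("127.0.0.1", port), SimpleHTTPRequestHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `shutdown()` on the returned server stops it again. From a shell, `python -m http.server 8000` does the same without writing any code. Note that the Python documentation does not recommend `http.server` for production use; the point here is only how much ground level reuse gives us for free.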

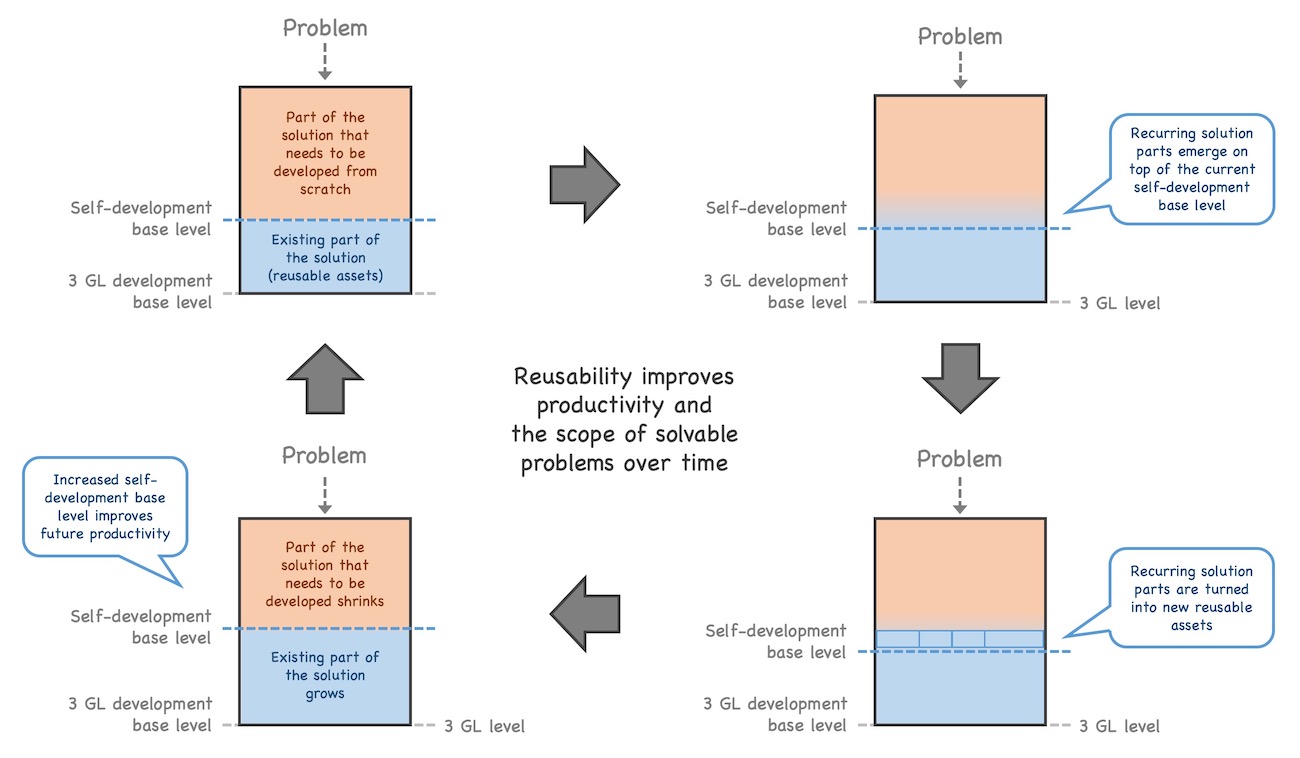

Reusable assets provide the foundation for continuously raising the ground level that we start with if we need to solve a problem – from the string class to the HTTP server and beyond.

Advice #5: Try to use existing reusable assets to raise your productivity. Do not try to reinvent the wheel.

Thus, to repeat my claim: reusability has a huge value that cannot be overestimated, and I would not want to do without it (I never want to have to write a string class again).

Advice #6: If you solve a problem that has good reusability suitability properties and is needed in many places, consider creating a reusable asset (if you can afford to pay the extra costs).

If you need to solve a problem that has good reusability suitability properties and that would support a lot of engineers who all need to solve the same problem, consider creating a reusable asset – especially if the reusability dependency can be kept inside a process boundary.

If you are in such a situation and can afford to go the extra mile to make your asset reusable: go for it! Just make sure that it is really worth the effort before you invest the extra time and money.

Bonus advice: Think about reusability in other areas of software engineering, e.g., on the level of ideas.

Reusability also has a huge value in places other than software design and development. For example, David Heath came up with what I consider a very valuable idea in a short discussion we had on Twitter:

This is another area in the domain of software engineering where the idea of reusability promises a huge value. Patterns are a step in that direction, yet they are not sufficient. A culture of continuous learning and improvement is also needed to implement it. I will not dive deeper into it here, but I will probably pick that idea up in later posts.

Moving on

This blog series has become longer than I initially expected. While I am sure that I still missed some relevant aspects of the discussion, I think I was able to debunk some of the most popular fallacies regarding reusability.

Yet, this was not an argument against reusability. It was an argument against using it in the wrong places and for the wrong reasons.

We need reusability as continuous productivity driver: Building on the (reusable) building blocks of today, we solve problems of all kinds. Over time, we learn that some of the problems we solve pop up over and over again. We then create reusable assets capturing a general solution for these types of problems. With these assets we start solving problems one level up on the reusability and productivity ladder – and the story will repeat.

This is a great evolution and we definitely want reusability for that. But we do not need any hyped architectural paradigms based on hollow business case promises for it. Everything we need to implement great reusable assets has been in place for a long time.

Those architectural paradigms, usually based on distributed modularization (like microservices), also have value. So, it is not that they should be avoided categorically. Their value is just not based on reusability.

I will leave it here and hope that this little series gave you some ideas to ponder – and some arguments for the inevitable next discussion about reusability in the wrong places … ;)

[1] Of course, tight coupling might expose some undesired properties at development time, but that is a different story I might discuss in another post.

[2] Additionally, the usual misconceptions regarding “layered architecture” did their part. I will discuss in a later post why layered architecture typically is a misinterpretation of the layer pattern.

[3] Meanwhile, we see similar patterns emerging in the microservices domain. The rise of service meshes, API management tools and (surprise!) process engines points in the same direction again. We have yet to see where it will end.

[4] If you do not want to wait for those posts, you might want to have a look at this slide deck. You can also find a recording of an abridged version of the talk here (I only had 45 min for a 60 min talk, so I had to cut a few corners). People who understand German can alternatively watch an early complete version of the talk here.

[5] Note that “queue” means a logical queue here, i.e., something that is able to buffer data and works in a FIFO fashion. There are lots of options to implement such a queue on a technical level. A dedicated message queue system is just one of them.
