The public cloud revolution - Part 1

Preparing the revolution


In discussions with other people, I often realize that many of them have not yet understood how revolutionary public cloud is. In my previous posts, I already discussed why I think cloud-ready is not a viable strategy and the disruption delay that also affects cloud computing. Here I would like to add a different perspective.

My claim is: Public cloud, used right, is a game changer on the business side.

Whoa, that is a strong statement, you might say.

And admittedly, it is – especially without any further explanation. Thus, let me unpack this statement.

A key observation is that the evolution of public cloud computing was a sort of exponential development – starting relatively slowly, but gaining more and more momentum over time. For quite a while, public cloud mostly looked like an infrastructure topic, competing with traditional outsourcers. But due to the ever-growing accumulation of service layers building on each other, public cloud eventually developed a very different impact, leading to my claim.

Let me walk you through the cloud evolution of the last 15 years and show how it built up over time. As this would have made for a rather long blog post, I decided to split it into two posts:

- Prelude – preparing the revolution (this post)

- Main movement – the revolution itself

The remainder of this post discusses the first years of the public cloud evolution, which were necessary to trigger the revolution. I will use Wardley maps to illustrate the different stages.

Still, be aware that this description is a bit idealized. In reality the different stages were not that clearly distinguishable. And to be frank, I also doubt the big public cloud providers had this revolution in mind when they started their platforms. More likely, they only realized the potential of what they created mid-way.

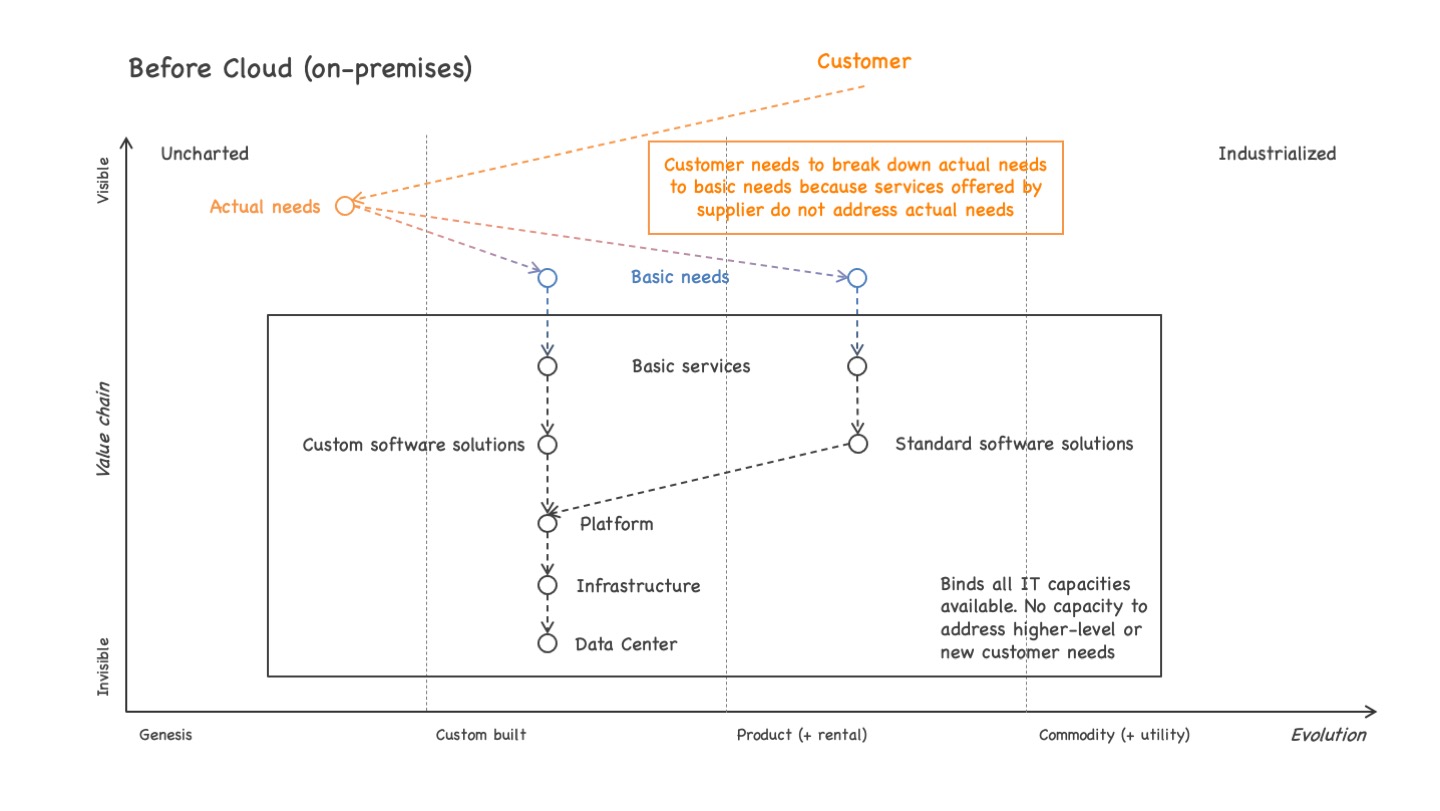

Before public cloud (on-premises)

The basic value chain I laid out goes like this:

- As (almost) always on Wardley maps, it starts at the top with the users and their needs.

- These needs are mapped to services that a supplier (your company) offers.

- The (IT side of the) services are implemented using either custom or standard software solutions.

- The software solutions run on a runtime platform (which often also supports development and testing).

- The platform in turn runs on some IT infrastructure.

- The infrastructure runs in a data center.

Even if this relatively coarse-grained value chain leaves out many potentially interesting details, it is accurate enough for its purpose: explaining the public cloud revolution.

Before moving on, it is important to note one more vital detail: the breakdown of the actual user needs into the basic user needs that suppliers support. Very often suppliers offer their products and services based on their internal organization models. E.g., in an insurance company, almost everything is organized around the concept of an insurance policy. Customer interactions also typically start with asking for the related policy.

While this is an efficient way for a company to organize its products, services and processes, it often does not match the actual needs of its customers. Those customers are typically interested in some kind of higher-level service.

E.g., in the case of insurance, customers typically care about security regarding their living situation, travel or the like. They do not really care about an insurance policy. They only take out insurance to satisfy their actual need, the aforementioned security in specific life situations. Insurance policies are just a necessary evil for them, nothing they really ask for. 1

Still, as suppliers usually only offer services on a lower level, matching their internal organization model, the customers need to break down their actual needs into lower-level basic needs and try to satisfy them by combining several services from several suppliers.

That said, many companies are not stupid. They know their customers would love to be offered higher-level services that match their actual needs. But that is easier said than done.

Especially in IT, all the available capacity is bound to delivering the services in their existing form. In an on-premises context, the whole IT stack, from the data center up to the software solutions powering the service offerings, is either custom built or bought (standard software) – and the standard software also needs to be customized and maintained as well as operated. All this typically binds all the available IT capacity.

In such a setting it is very hard, if not impossible, to free the IT capacity needed to design, implement and run higher-level services – or at least it is prohibitively expensive.

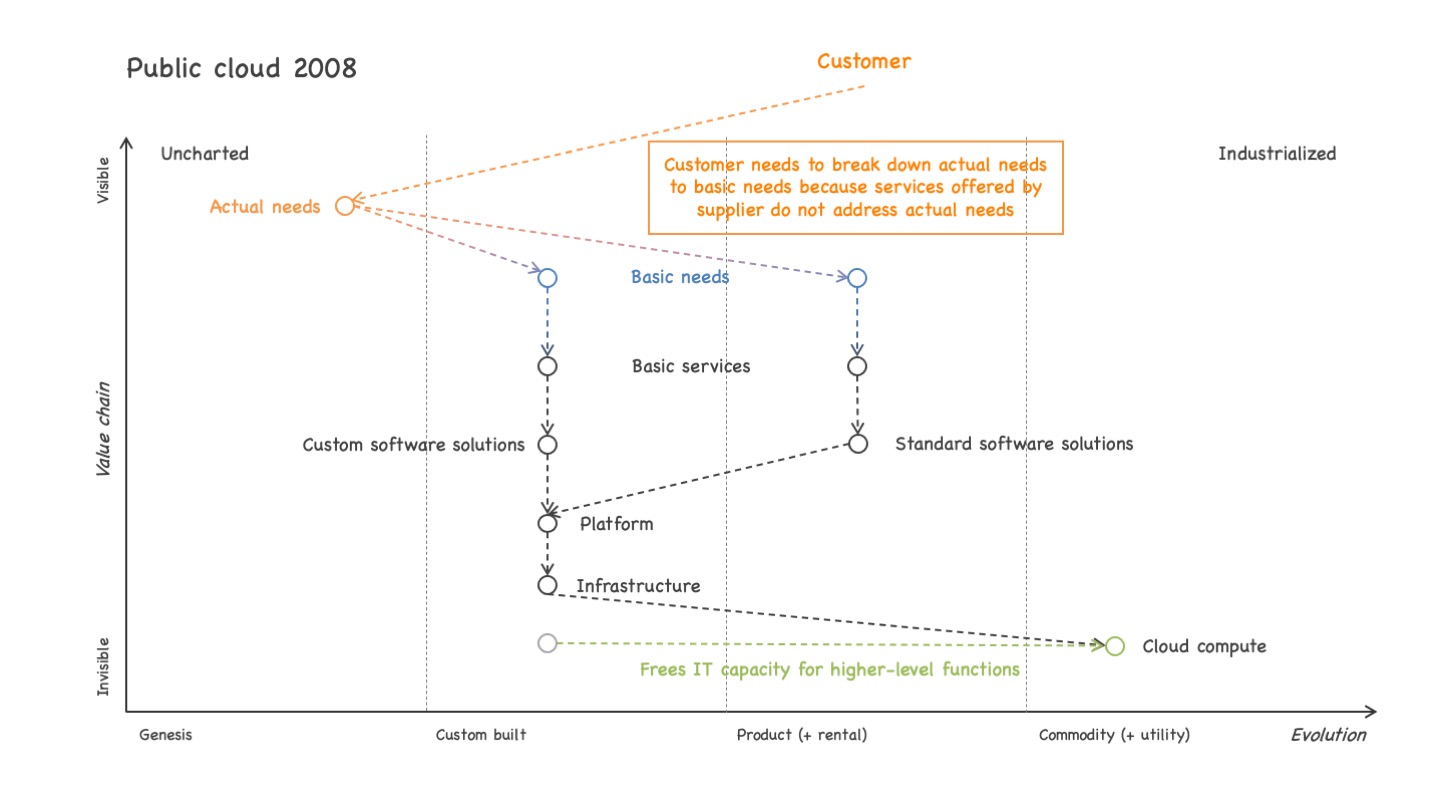

Public cloud ~2008

Around 2008 (give or take a year or two), public cloud mostly offered compute, storage and a bit of network. In terms of the AWS services, we are talking about services like EC2, S3, EBS and the like 2. This made it possible to move the data center hardware to the public cloud. Instead of buying (or leasing) new hardware, it was possible to rent it as a cloud service.

This removed the lowest infrastructure level, the one close to the hardware, and freed some IT capacity for higher-level functions: the capacity that was previously needed for selecting and buying hardware, installing it, setting it up, etc. 3. Everything above that level, starting with most of the required infrastructure components (see next section), still needed to be managed by the IT department, still blocking most of its capacity.

Also, traditional outsourcers had already offered data center outsourcing before 2008. Their response times were usually a lot longer, i.e., a new server was not up and running a minute or two after requesting it. Instead it usually took days, weeks or even months. But as the typical software delivery models did not yet require faster hardware provisioning, a revolution was not yet on the horizon.

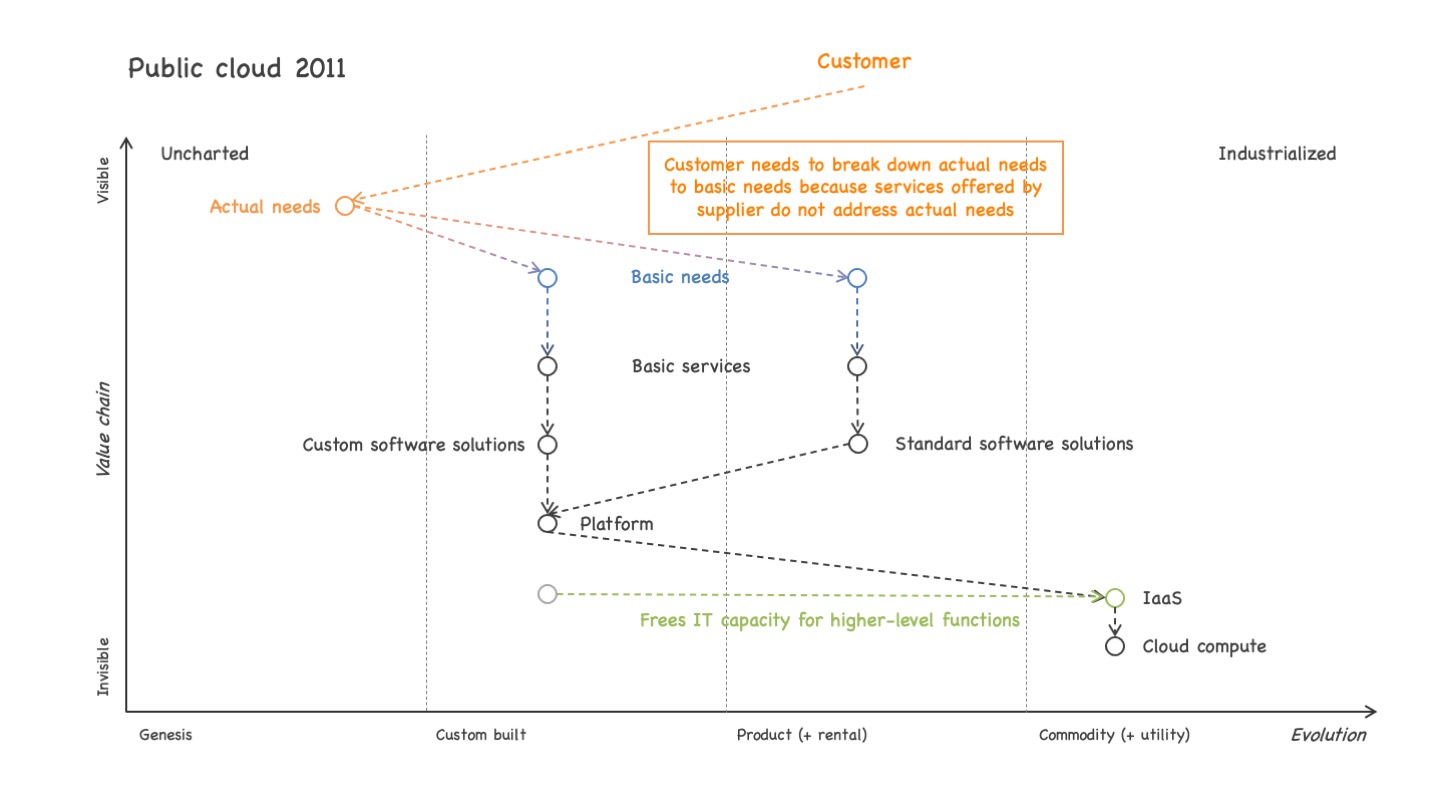

Public cloud ~2011

Around 2011, the public cloud offerings were complete enough on an infrastructure level that it was possible to go for full IaaS. Not only were simple compute and storage available on demand, but also more advanced managed infrastructure components like different kinds of databases, several smarter networking and communication services (e.g., load balancing, routing, messaging, CDN, …), and more.

This made it possible to move the responsibility for managing most of the infrastructure components to the public cloud provider of choice. This again freed IT capacity for higher-level functions: the capacity that was previously needed to set up and run all these infrastructure services.

Still, the picture was far from complete. Some traditional outsourcers also offered similar kinds of services, usually less complete and not in such an elastic fashion. But for many companies this was still good enough, and thus a revolution was still far away.

Stuck in the IaaS level

Interestingly enough, IaaS is still the perception of public cloud that I quite often encounter when discussing the topic with IT decision makers. I am not completely sure why this is the case.

Maybe quite a few decision makers made up their minds about public cloud computing at that point in time (or a bit later, see the next section about PaaS) and then stopped following the evolution, as they did not see any relevant improvement potential in using public cloud.

Another relevant factor is probably the Cloud Native Computing Foundation, which basically reduces “cloud native” to IaaS using containers as compute building blocks. 4

Additionally, at least in Germany where I live, many decision makers decided to go for private cloud solutions for various reasons. As all available private cloud offerings basically stop at the IaaS level, or at the PaaS level at best, this might also have limited the perception of the respective decision makers.

And finally, I see a lot of discussions regarding “vendor lock-in” in the context of public cloud. I discussed in a prior post why I think this whole lock-in discussion is misdirected. Still, if you follow that idea, you need to limit yourself to the services that all public cloud providers offer alike and that you ideally can also provide on-premises in case of need – which leaves you with the IaaS level as the lowest common denominator.

But then again, I am not completely sure why many decision makers have not yet understood the effects of public cloud beyond IaaS or PaaS.

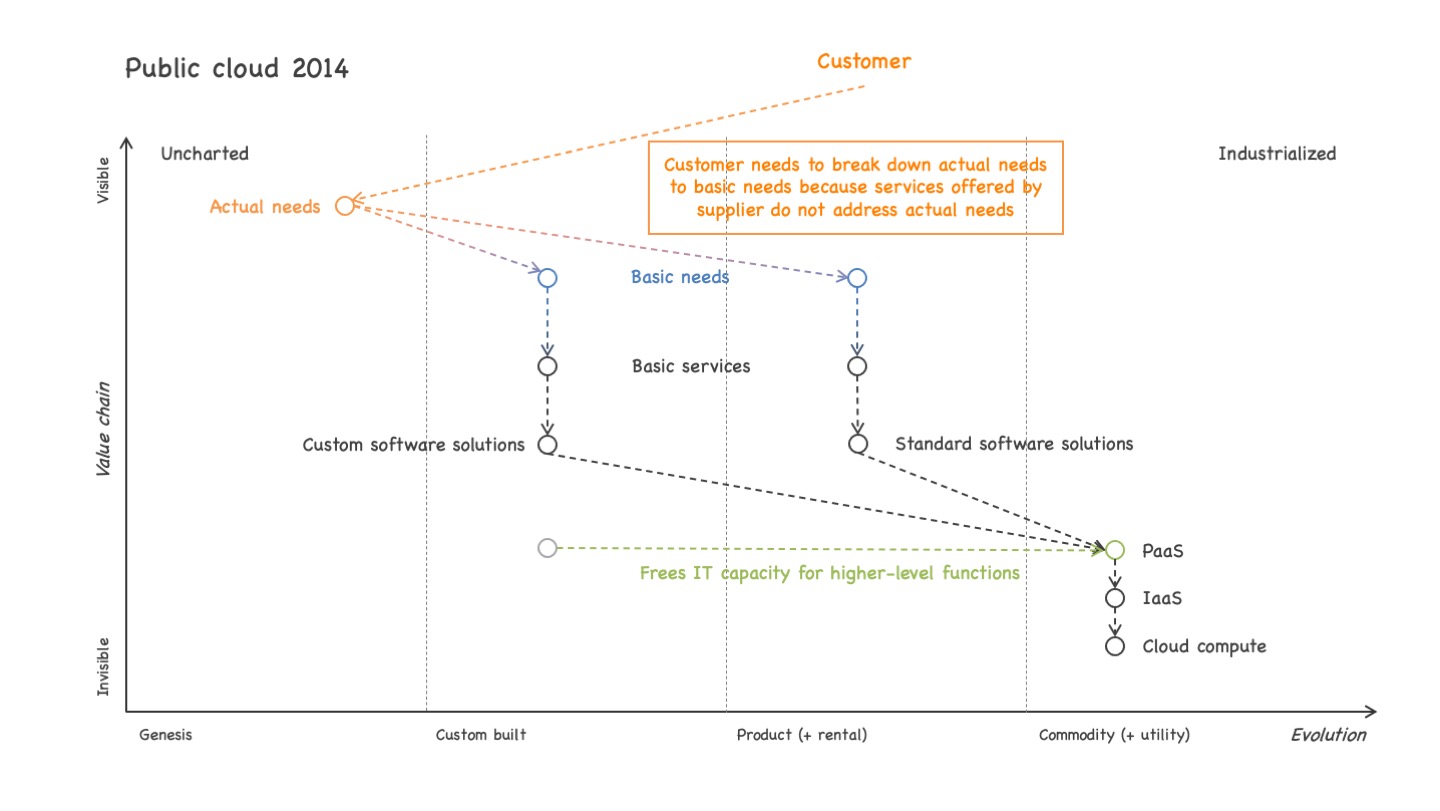

Public cloud ~2014

Around 2014, the public cloud offerings supported a good PaaS experience, i.e., the development, test, deployment, operations and monitoring of software also offered a good experience – at least for most usage scenarios. Online IDEs came a bit later, but for the most part the public cloud platform experience had become quite good by around 2014.

Again, this made it possible to move the responsibility for managing most of the required platform components to the public cloud provider of choice. This again freed IT capacity for higher-level functions: the capacity that was previously needed to set up and run all these platform services.

Still, there was no revolution.

Summary

We discussed the evolution of the public cloud offerings from their beginnings until around 2015. The cloud services started at the plain compute and storage level and worked their way up to the PaaS level. Up to that point in time, the revolution that would start a bit later was not easy to spot. A few people already sensed the true potential of this evolution, but most people did not.

For most people, the public cloud offerings mostly affected the operations side of IT. All the stuff that was needed to build and run software had become available on demand.

Still, as the software development processes had not changed up to that point in time, most people considered the advantage of public cloud offerings rather marginal compared to traditional outsourcing. Yes, the added elasticity would be nice. But as custom software development projects as well as standard software implementation projects both took a long time, a bit of upfront capacity planning was good enough to do without the elasticity bonus of a public cloud provider.

And as long as you were not Netflix or some other company with a business model that heavily relied on elastic up- and down-scaling of their resources at runtime, the lack of elasticity also did not matter too much from that perspective.

Thus, no revolution.

But around 2017, public cloud had worked its way up the whole hardware and software stack, and it started to massively affect the way software was developed. And that is when the real revolution started. We will discuss this in the second post of this series. Stay tuned … ;)

-

Note that I took insurance as an example. You can find similar examples of a mismatch between customer needs and supplier service offerings in many other areas, too. ↩︎

-

As I mentioned before: the evolution of the levels was not as clear-cut as I describe it here. E.g., AWS launched SQS, an IaaS building block, as early as 2006. Still, in terms of the completeness of the offerings regarding the different IT stack layers (hardware, IaaS, PaaS, …), the development was roughly as I described it. At least it is easier to comprehend this way … ;) ↩︎

-

Note that “freed capacity” is not as simple as it might sound. It means you need to retrain the people whose work was delegated to the public cloud so they can pick up other types of work. It is not a simple “Let me move these 10 ‘heads’ from here to there”. Unfortunately, way too often decision makers only think in terms of potential cost savings when they hear of freed capacity. Personally, I think that with some careful planning and good retraining it is possible to achieve much bigger positive effects for the company than you could ever realize with cost savings. In particular, the psychological effects on the people who have not (yet) been laid off due to “this new technology” (here: public cloud) tend to be devastating in terms of productivity. ↩︎

-

Maybe they did it due to pressure from some influential members that only provide offerings at that limited level and want to protect their economic interests (a variant of the widespread lowest-common-denominator effect of standards committees). But that is just a wild guess … ;) ↩︎
