Back to the future

How (mainframe) history is repeating

Recently I tried to catch up with the latest developments in platform engineering when I once again experienced an all too familiar déjà vu feeling. During my research, I came across the following definition of platform engineering:

“Platform engineering is the discipline of designing and building toolchains and workflows that enable self-service capabilities for software engineering organizations in the cloud-native era. Platform engineers provide an integrated product most often referred to as an “Internal Developer Platform” covering the operational necessities of the entire lifecycle of an application. An Internal Developer Platform (IDP) encompasses a variety of technologies and tools, integrated in a manner that reduces cognitive load on developers while retaining essential context and underlying technologies.” 1

When I let this sink in, it suddenly reminded me of … what I saw on the mainframe about 30 years ago.

You must be kidding, you might think. This is brand new, hot stuff, targeted at top-notch, modern technology, the new kid on the IT block – and you are reminded of outdated technology from the past? 2

If you feel like that, I can understand you. However, if you take a few steps back and abstract from the concrete technology used, you start seeing history repeating (assuming you have been in the business long enough).

From CICS to the Lambda scheduler

I had my first déjà vu experience regarding cloud technology when I reasoned about the AWS Lambda scheduler 3 and the changed software development paradigm that functions imply: You write short pieces of functionality that can be called from other pieces and that in turn can call other pieces. You place your piece of functionality in an internal repository and the scheduler runs it on demand, i.e., whenever anyone requires the function.

The scheduler also makes sure that multiple instances are run if needed. It scales up and down. It (pre-)loads and removes functions on demand. It makes sure called functions are executed and receive the data handed over to them. It handles potential runtime errors. And so on.

The developers can completely focus on the business logic they want to implement in the function. The “price” for that convenience is that they need to obey a few constraints. E.g., they may only communicate via events. The function must be stateless. If they want to persist state over a series of calls, they need to use a database. As the scheduler starts, stops and restarts functions as needed, it may stop a function at any time. Thus, developers must not create stateful functions.
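To make these constraints a bit more tangible, here is a minimal Python sketch. It deliberately does not use the AWS Lambda API: `handle_order_event`, `InMemoryStore` and the shape of the event are hypothetical names chosen for illustration only. The function keeps nothing in memory between calls, receives its input as an event, and persists its running total through an external store (a plain dict standing in for a database).

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryStore:
    """Stand-in for a database (hypothetical, for illustration only)."""
    data: dict = field(default_factory=dict)

    def get(self, key, default=0):
        return self.data.get(key, default)

    def put(self, key, value):
        self.data[key] = value


def handle_order_event(event: dict, store: InMemoryStore) -> dict:
    """Stateless handler: all input arrives via the event or the store."""
    customer = event["customer"]
    # State that must survive several calls lives in the store,
    # never in the function instance itself.
    total = store.get(customer) + event["amount"]
    store.put(customer, total)
    # The result is handed on as another event.
    return {"type": "order_total_updated", "customer": customer, "total": total}


if __name__ == "__main__":
    store = InMemoryStore()
    # A scheduler could run each call in a fresh instance of the function;
    # the outcome would not change because the state sits in the store.
    print(handle_order_event({"customer": "c1", "amount": 10}, store))
    print(handle_order_event({"customer": "c1", "amount": 5}, store))
```

Because the handler holds no state of its own, a scheduler is free to stop it after any call and start a new instance for the next one.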

All this results in a programming paradigm that is very different from monolithic applications run in virtual machines or (micro-)services run in containers. Those are typically long(er)-running runtime artifacts which often are stateful and offer many more communication and collaboration options. But they also require developers to implement big parts of the state management, runtime deployment handling and error handling on their own. From a developer’s perspective, functions are more limited but also a lot easier in terms of what they need to take care of. 4

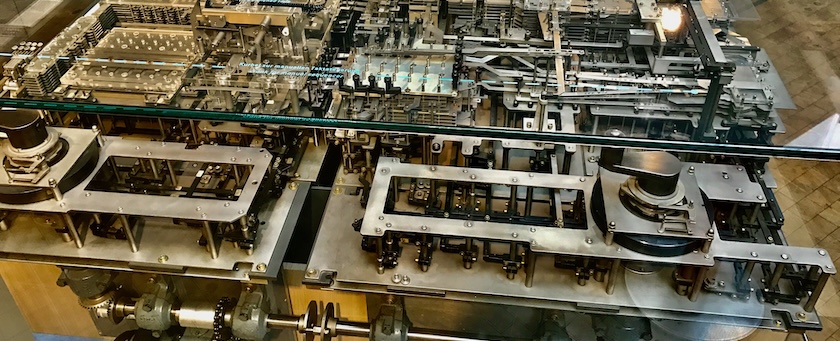

When I pondered this function programming paradigm, it reminded me a lot of transaction monitors like CICS or IMS 5 on the mainframes: Developers wrote pieces of functionality (so-called “transactions” – which only indirectly had something to do with database transactions). They stored the compiled transactions in an internal repository. The scheduler (called “transaction monitor”) ran the transactions on demand.

The scheduler also made sure that multiple instances were run if needed. It scaled up and down. It (pre-)loaded and removed transactions on demand. It made sure that called transactions were executed and received the data handed over to them. It handled potential runtime errors. And so on.

The developers could completely focus on the business logic they wanted to place into the transaction. To do so, they needed to obey a few constraints. E.g., they could only communicate via dummy control sections 6. If they wanted to persist state over a series of calls, they needed to use a database (and the transaction monitor also took care of the database transaction handling – here we get the connection to database transactions). As the transaction monitor started, stopped and restarted transactions as needed, it could stop a transaction at any time. Thus, developers had to make the transactions stateless. 7

If you read through that description and compare it with the Lambda function description, you notice a surprising number of similarities – not only because I used almost the same words to describe it. And if you go back to the origins of the transaction monitors, people on the mainframe had problems similar to those we face in (cloud-based) IT today.

One problem was the utilization of the hardware resources available. Compute resources were scarce and expensive. Therefore, it was important to utilize the hardware as well as possible. This was a tedious and complicated job that development teams needed to solve over and over again with every new piece of software. As a consequence, the vendors eventually solved the problem for their customers by developing transaction monitors. The monitors took care of optimizing the resource usage. To do so, they had to impose a few constraints on the pieces of functionality they were able to run.

As a side effect, this led to a novel software development paradigm which solved another problem of the time: Software development before transaction monitors was hard and cumbersome for most developers. The mental load was high as developers had to take care of many technical requirements and intricacies on top of the actual business logic they needed to implement.

Transaction monitors reduced the mental load significantly by taking care of many runtime related issues at the cost of limiting the options of the developers. It was like: You stick to these rules and I (the transaction monitor) will take care of everything that is not related to the business logic.

The Lambda scheduler and all the other function schedulers that came after it took the same approach. While originally invented to optimize resource utilization, they also led to a different development paradigm that reduces the mental load of developers significantly compared to, e.g., microservices. 8

From JCL to step functions

I had a similar déjà vu experience when looking at AWS Step Functions. In the beginning, I could not really make sense of them. A bit of a simple workflow engine. So what! But when looking closer at them, they started to remind me of JCL, the Job Control Language used on the mainframes to describe batch job networks. If you had a series of batch jobs that were connected to each other in some way (e.g., batch job B should only be started after batch job A had completed successfully), you used JCL to describe those batch job workflows. 9

Step functions conceptually feel a bit like a very early and simple version of the JCL job network description capabilities. You can use them to create (simple) job networks, e.g., in combination with AWS Batch, which has a ready-to-use integration with step functions.
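The core idea of such a job network (job B only starts after job A has completed successfully) can be sketched in a few lines of Python. This is neither JCL nor the Step Functions state language; `run_job_network`, the job names and the status strings are hypothetical and only illustrate the dependency rule, assuming the dependencies contain no cycles.

```python
from typing import Callable, Dict, List


def run_job_network(jobs: Dict[str, Callable[[], bool]],
                    depends_on: Dict[str, List[str]]) -> Dict[str, str]:
    """Run each job once all its predecessors succeeded; skip it otherwise.

    Each job is a callable returning True on success and False on failure.
    Assumes the dependency graph is acyclic.
    """
    status: Dict[str, str] = {}

    def run(name: str) -> None:
        if name in status:
            return  # already handled
        predecessors = depends_on.get(name, [])
        for predecessor in predecessors:
            run(predecessor)
        if all(status[p] == "succeeded" for p in predecessors):
            status[name] = "succeeded" if jobs[name]() else "failed"
        else:
            # A predecessor failed or was skipped, so this job must not start.
            status[name] = "skipped"

    for job_name in jobs:
        run(job_name)
    return status


if __name__ == "__main__":
    jobs = {"A": lambda: True, "B": lambda: True}
    # B depends on A and therefore only runs after A succeeded.
    print(run_job_network(jobs, {"B": ["A"]}))
```

If job A returned `False` in this sketch, job B would be marked as skipped instead of being started.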

As most processing these days has moved from batch to online, step functions look quite a bit different from JCL, of course. Step functions not only support batch processing, they also support online workflows, e.g., by connecting functions. So, they are a bit of JCL and a bit of a simple process engine (including functionality for human intervention).

As a consequence, looking at JCL alone is not sufficient to understand the ideas behind step functions. Still, looking at JCL and other job network description languages of the past is useful to get an idea of how step functions might evolve in the future.

From mainframe SDEs to platform engineering

And when I read the definition of platform engineering above, I had another déjà vu moment. It reminded me of the software development environments (SDE) on the mainframes. The idea of those SDEs was to offer the developers a nice and easy software development experience.

Usually, all you had to do as a regular programmer (back then software developers were still called “programmers”) was to look up your piece of source code (or create a new one, usually based on a predefined template and something Microsoft much later started to call “wizards”), insert or update your code and then trigger the build process. The SDE took care of everything else.

The mental load when using such an SDE was quite low considering the complexity of the build and runtime infrastructure and middleware of a normal mainframe – which is exactly the aim of platform engineering: To reduce the mental load of a developer and to provide a “developer experience” that hides the complexities and intricacies of today’s build and runtime environments, allowing developers to focus on their actual task (which usually is implementing some business features).

Of course, most companies investing in platform engineering merely hope to increase developer productivity instead of caring about “developer experience” and mental load, which I think is the wrong motivation. However, the motivation back in the mainframe days might have been the same, and the direction and the effects, if successfully implemented, are the same.

Again, maybe mainframe SDEs can give us some hints where platform engineering most likely is heading in the future. The underlying technology varies, but the underlying ideas feel very similar.

History repeating?

If you follow my reasoning, the question arises whether history repeats itself.

Somehow, it feels a lot like it. We currently face challenges in regular enterprise software development (with and without cloud) similar to those we faced about 40 to 50 years ago on the mainframe. Infrastructure and middleware had become really powerful but continuously harder to understand, master and fully utilize. Software continuously grew in size and became harder and harder to structure and maintain. The mental load to get things right continuously grew and most developers had a hard time really getting their heads wrapped around the complexity and intricacies of their more and more powerful build and runtime platforms.

Sounds familiar, doesn’t it? If you think about microservices, containers, Kubernetes (including its huge ecosystem), polyglot persistence, cloud, event buses, SPAs, CI/CD pipelines (in all their flavors), etc., we face a lot of developers who (rightfully) feel overstrained. They are expected to implement some business features, but to do so they need to understand tons of details of the underlying build and runtime platform. Writing a simple “Hello world” for an average company’s IT landscape often takes days, if not weeks, of learning the infrastructure and middleware basics you need to know to do so. And we have not talked yet about the more advanced topics …

Hence, developments like platform engineering or function schedulers, including the programming paradigm they imply, are not surprising to me.

Still, I would not simply call it “history repeating”. I would rather follow an idea from Gunter Dueck. He explained the déjà vu feeling the following way (based on how I remember it):

Needs and solutions sort of chase each other. Solutions are created based on needs. On the other hand, existing solutions give rise to new needs. And usually, they chase each other in circles – which sometimes leads to the impression we have seen the same thing before. This happens whenever needs and solutions have completed a whole rotation.

But in reality we do not end up at the same place we were before. The continuous chasing creates progress. Gunter visualized it using two interlocked spirals that continuously grow. Whenever we complete a whole rotation, we have the same view but from a higher place. Thus, history is not really repeating. Rather, we have completed a whole rotation and arrived again at a similar, but still different, place.

The future of software developers

But even if we are at a different height using different technology, we can observe a very similar development. And we can use this observation to make some not too unlikely predictions regarding the future of software engineers by looking at the developments from the past.

If we look at the roles of software developers in a mainframe context in the past, we see the following primary roles (names may vary depending on area or industry):

- Most developers were application programmers. Their job was to implement business features. They did not need to know how the build and delivery processes worked internally. They only needed to know how to use them – how to find the code they needed to work on and which number to select in their SDE menu to build the software they had just implemented. The SDE took care of everything else. Typically, 85%-90% of all developers were application programmers.

- Some developers were organization programmers. They had more privileges than application programmers. Typically, they took over the more challenging parts of application development, especially if it required a bit of understanding of the internals of the mainframe. They also created job descriptions and networks. They often created and tweaked the templates that application programmers used for their programming tasks. Sometimes, they even had the privileges to work on selected SDE internals. This role is roughly comparable to a “DevOps developer” of today who not only implements business features, but also takes care of implementing the required Kubernetes descriptors or Helm charts and of the CI/CD pipeline. I.e., organization programmers had a deeper understanding of the inner workings of the SDE and the runtime environments. Often, they also held sort of a lead developer role. Typically, around 10% of all developers were organization programmers.

- Very few developers were system programmers. They basically had access to everything on the mainframe and the privileges to change everything. This role is basically comparable to a root user of a *nix machine, except that there typically were fewer system programmers than root users on a *nix machine – especially because you needed such unlimited privileges extremely rarely to get your job done on a mainframe. Hence, the system programmer role was restricted to the few developers who set up everything for all the other developers. Typically, 1%-2% of all developers were system programmers.

If we look at this distinction, I think one of the big goals of platform engineering is to reduce the mental load of developers who are meant to be application programmers but are forced to acquire the skills and knowledge of advanced organization programmers because they involuntarily need to know a lot about the internals of the build and runtime platform they use. The existing abstractions are so poor that most developers need to focus quite a lot on topics that are not related to implementing business features.

Often, that leads to stress and mental overload. On the one hand, it is expected that you complete the assigned business features as quickly as possible. On the other hand, you need to know (and often configure) low-level details of your platform to get the job done. It is a bit as if you needed to be a mechanical engineer to drive a car, including manually adjusting the injection timing while accelerating and slowing down.

Platform engineering aims at creating better abstractions for the implementation, build and runtime processes, allowing software developers to focus more on turning business needs into code than dealing with low-level technical abstractions.

In other words: While we currently involuntarily have a ratio of 98% organization programmers and 2% system programmers, platform engineering aims at coming back to the ratio we know from the mainframe era: 85%-90% application programmers, around 10% organization programmers and 1%-2% system programmers (of course using different names for the roles).

Adding AI to the picture

When I took a step back and added AI – or more precisely: AI-supported or AI-based software development – to the picture, a new question came to my mind. The question is based on the following line of reasoning:

- The current promises and expectations are that AI will be able to implement standard software development tasks in the future.

- Implementing standard software development tasks is what application programmers do.

- Platform engineering will turn most developers into application programmers.

This leads to the question:

Will platform engineering boost replacing software developers with AI-based software development solutions in the future?

Or asking the question from a different point of view:

Is platform engineering a paradox in itself because if it is successful, it will eliminate its own target group?

To be frank: This reasoning is quite pithy. Also, it does not consider several existing uncertainties as well as many nuances.

Still, I think the question is not completely absurd. Both developments – platform engineering and AI-supported (or in the future AI-based) software development – are driven independently, mostly ignoring each other. But as soon as you combine these two developments based on their aims and promises, the question pops up.

This does not mean we should abandon platform engineering – not at all. The mental load most developers currently experience is way too high because most modern technology and tooling offers terribly poor abstractions. We need to do something about it.

Still, when looking at the bigger picture, at least for me this does not only trigger a déjà vu feeling but also a “Spirits that I’ve summoned …” feeling. 10

I do not know where the current developments will lead, or to which degree the vendor promises and expectations regarding AI and platform engineering will be fulfilled. However, looking around I sometimes get the impression we are happily sawing at the branch we are sitting on and frenetically celebrating everyone who hands us a sharper saw. In these moments, I deeply hope I am wrong. The future will tell … 11

1. See https://platformengineering.org/blog/what-is-platform-engineering.

2. Personally, I do not consider mainframe technology outdated. Mainframes and their software are impressive pieces of hardware and software engineering and we still can learn a lot from them. However, due to developments not necessarily related to technology excellence, mainframes are becoming more and more a thing of the past.

3. I use technologies from AWS as an example. Most other hyperscalers have similar offerings in place.

4. Of course, debugging functions can still be a tedious job – also partly due to the platform that hides the runtime environment from the developers a lot better than, e.g., a regular container scheduler like Kubernetes or a virtual machine. But most likely such issues will be solved over time.

5. I use technologies from IBM as an example because I worked with IBM mainframe computers most of the time. Even if I also have some experience with mainframes from other vendors, it is too limited to trigger any déjà vu experience.

6. For the German readers: If you did (or still do) mainframe software development and always worked with the German documentation from IBM (which is very widespread in German mainframe development): The German term for “dummy control section” is “Copystrecke”.

7. I wrote this section in the past tense because I experienced those things roughly 30 years ago. Nevertheless, all these concepts are still valid today if you are doing software development on a mainframe using a transaction monitor.

8. Interestingly, Google tried a similar approach with their App Engine (GAE), first released as a preview as early as 2008: You stick to a few rules as a developer and the runtime environment takes care of the complicated parts of running applications in a cloud environment. However, the offering seems to have come too early. Most developers simply complained about the perceived restrictions and turned towards the (way more complicated) microservices and container schedulers like Kubernetes. The time for such an approach was not yet ripe.

9. JCL did a lot more than just describe job networks, as it contained all the information needed to run a batch job. This can be roughly compared to a *nix shell script that configures and runs a series of headless batch programs. For more information, please refer to the Wikipedia page or the JCL documentation provided by IBM.

10. Those who understand German might rather want to use the original words from Johann Wolfgang von Goethe: “Die ich rief, die Geister [werd’ ich nun nicht los.]”

11. Again, this is not a call to abandon developments like platform engineering or AI-supported software development. However, I think we should not blindly follow every new hype (which is often reinforced by the profiteers of the hype). Instead, we should try to put new ideas in a broader context and ponder their potential consequences. This helps us to use and maybe even shape new technologies in a more responsible way. The preferred way of the profiteers is not always the best way for everyone else, including us …
